OpenVINO™ Sample Application

This tutorial tells you how to:

Run inference engine object detection on a pretrained network using the SSD method.

Run the detection demo application for a CPU and GPU.

Use a model optimizer to convert a TensorFlow* neural network model.

After conversion, run the neural network with inference engine for a CPU and GPU.

Run the Sample Application

Check if your installation has the eiforamr-openvino-sdk Docker* image.

docker images | grep eiforamr-openvino-sdk
# if the image is installed, the output includes: eiforamr-openvino-sdk

Note

If the image is not installed, continuing with these steps triggers a build that takes more than an hour (possibly much longer, depending on system resources and internet connection).

If the image is not installed, Intel® recommends installing the Robot Complete Kit with the Get Started Guide for Robots.
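To use the image check in a script, you can wrap it in a small helper. This is a minimal sketch, not part of the EI for AMR tooling: the function name has_openvino_image is hypothetical, and it assumes the image name contains eiforamr-openvino-sdk, as in the command above.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of EI for AMR): reads an image listing on
# stdin and succeeds if any line mentions the eiforamr-openvino-sdk image.
has_openvino_image() {
    grep -q 'eiforamr-openvino-sdk'
}

# Usage (requires Docker on the host):
#   if docker images | has_openvino_image; then
#       echo "Image found"
#   else
#       echo "Image missing: the first 'up' triggers a long build"
#   fi
```

Reading the listing from stdin keeps the helper independent of Docker itself, so you can also run it against a saved listing.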

Check that the EI for AMR environment is set:

echo $AMR_TUTORIALS
# should output the path to the EI for AMR tutorials:
# /home/user/edge_insights_for_amr/Edge_Insights_for_Autonomous_Mobile_Robots_2023.1/AMR_containers/01_docker_sdk_env/docker_compose/05_tutorials

If nothing is output, refer to Get Started Guide for Robots Step 5 for information on how to configure the environment.
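The environment check can also be scripted so that later steps stop early when the variable is missing. This is a minimal sketch; the function name check_amr_env is hypothetical, and the only assumption is that AMR_TUTORIALS should name an existing directory.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeeds only if AMR_TUTORIALS is set and names an
# existing directory; otherwise prints the reason to stderr.
check_amr_env() {
    if [ -z "${AMR_TUTORIALS:-}" ]; then
        echo "AMR_TUTORIALS is not set" >&2
        return 1
    fi
    if [ ! -d "$AMR_TUTORIALS" ]; then
        echo "AMR_TUTORIALS is not a directory: $AMR_TUTORIALS" >&2
        return 1
    fi
    echo "AMR_TUTORIALS=$AMR_TUTORIALS"
}

# Usage:
#   check_amr_env || echo "Configure the environment first (Get Started Guide for Robots Step 5)"
```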

Run inference engine object detection on a pre-trained network using the Single-Shot multibox Detection (SSD) method. Run the detection demo application for a CPU:

CHOOSE_USER=root docker compose -f $AMR_TUTORIALS/openvino_CPU.tutorial.yml up

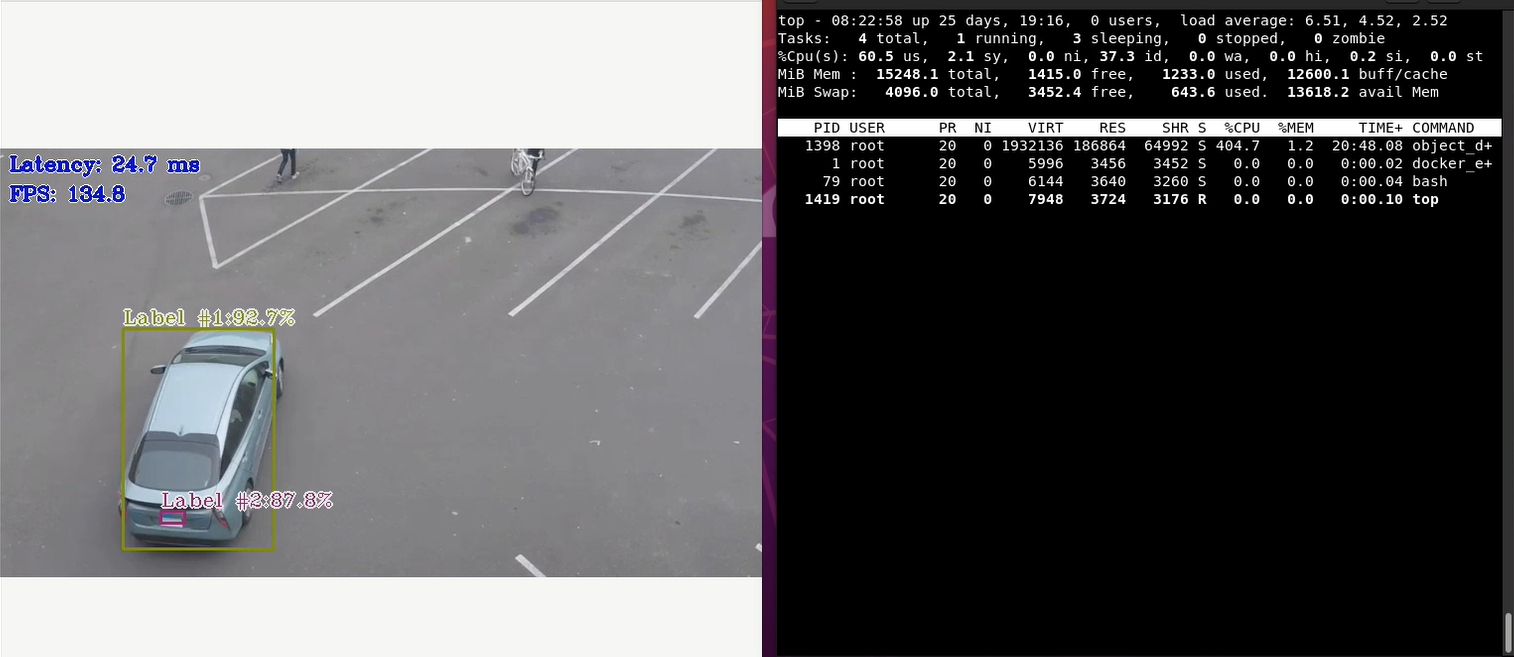

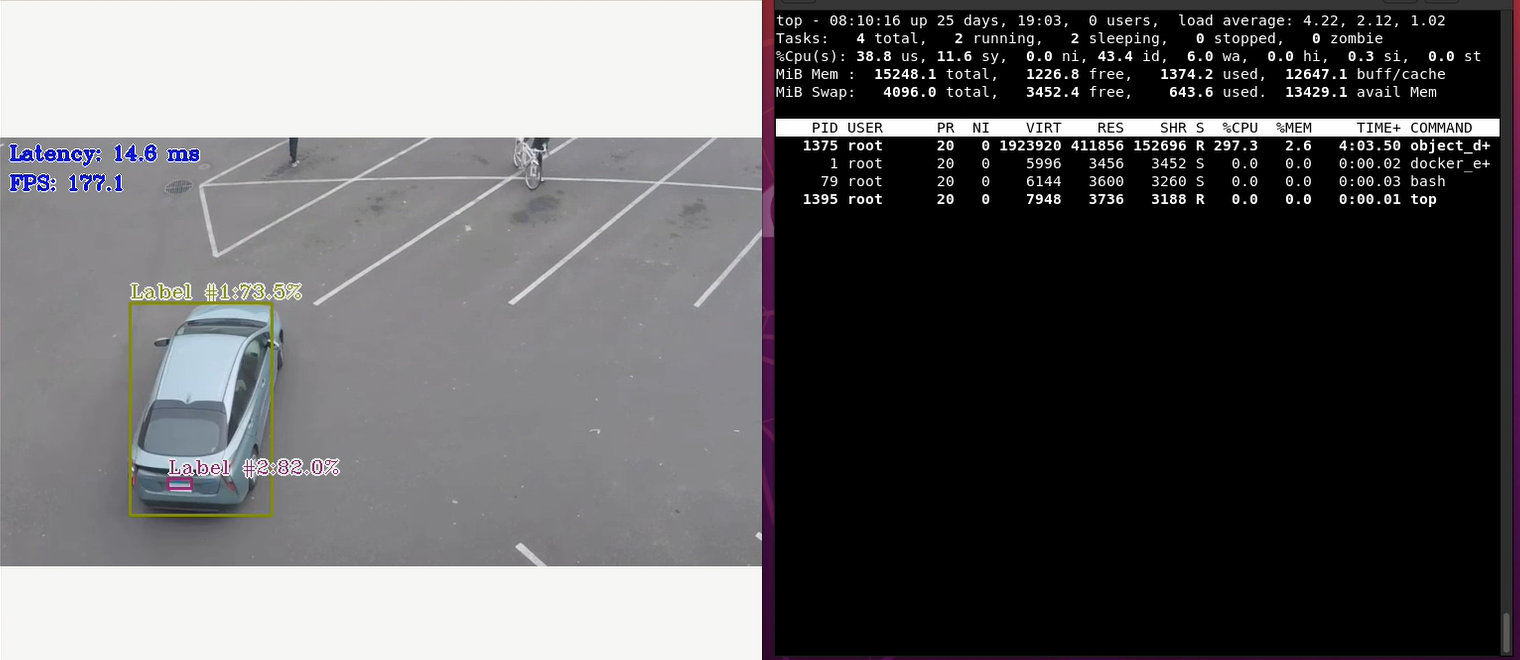

Expected output: A video in a loop with cars being detected and labeled by the neural network using a CPU.

To close this, do the following:

Type Ctrl-c in the terminal where you ran the up command.

Run this command to remove stopped containers:

CHOOSE_USER=root docker compose -f $AMR_TUTORIALS/openvino_CPU.tutorial.yml down

For an explanation of what happened, open the yml file. The file is well documented. To use your own files, place them in your home directory, and change the respective lines in the yml files to target them.

Run the detection demo application for the GPU:

CHOOSE_USER=root docker compose -f $AMR_TUTORIALS/openvino_GPU.tutorial.yml up

Expected output: A video in a loop with cars being detected and labeled by the neural network using a GPU.

To close this, do the following:

Type Ctrl-c in the terminal where you ran the up command.

Run this command to remove stopped containers:

CHOOSE_USER=root docker compose -f $AMR_TUTORIALS/openvino_GPU.tutorial.yml down

For an explanation of what happened, open the yml file. The file is well documented. To use your own files, place them in your home directory, and change the respective lines in the yml files to target them.
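The CPU and GPU steps differ only in the compose file name, so the up and down commands can be sketched as one pair of helpers. The function names tutorial_file and tutorial_compose are hypothetical; the file names and the CHOOSE_USER=root setting are taken from the commands above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: prints the compose file path for a device (CPU or GPU).
tutorial_file() {
    case "$1" in
        CPU|GPU) printf '%s/openvino_%s.tutorial.yml\n' "$AMR_TUTORIALS" "$1" ;;
        *) echo "usage: tutorial_file CPU|GPU" >&2; return 1 ;;
    esac
}

# Hypothetical wrapper: runs a docker compose subcommand (for example up or
# down) against the tutorial file for the given device.
tutorial_compose() {
    local device=$1
    shift
    local file
    file=$(tutorial_file "$device") || return 1
    CHOOSE_USER=root docker compose -f "$file" "$@"
}

# Usage (requires Docker and a configured AMR_TUTORIALS):
#   tutorial_compose GPU up      # stop with Ctrl-c
#   tutorial_compose GPU down    # remove the stopped containers
```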

Troubleshooting

If running the yml file gets stuck at downloading, edit the docker compose file of the OpenVINO™ tutorial:

gedit 01_docker_sdk_env/docker_compose/05_tutorials/openvino_CPU.tutorial.yml

Similarly, you can edit any other docker compose yml file in the ‘05_tutorials’ folder so that it runs behind a proxy.

In the docker compose file, add the following export commands after the line echo "******* Set up the OpenVINO environment *******", replacing http://<http_proxy>:port with your actual environment proxy settings:

export http_proxy="http://<http_proxy>:port"
export https_proxy="http://<https_proxy>:port"
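If the proxy is already configured in your host shell, you can generate the two export lines from the existing variables instead of typing them by hand. This is a minimal sketch: the function name print_proxy_exports is hypothetical, and it assumes that the host variables http_proxy and https_proxy hold the values you want inside the container.

```shell
#!/usr/bin/env bash
# Hypothetical helper: prints export lines built from the host's proxy
# variables, ready to paste into the docker compose file.
print_proxy_exports() {
    if [ -z "${http_proxy:-}" ] || [ -z "${https_proxy:-}" ]; then
        echo "http_proxy and https_proxy must both be set on the host" >&2
        return 1
    fi
    printf 'export http_proxy="%s"\n' "$http_proxy"
    printf 'export https_proxy="%s"\n' "$https_proxy"
}

# Usage:
#   print_proxy_exports
```

The helper only prints the lines; pasting them into the yml file remains a manual step so that the file's indentation stays under your control.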

If you encounter the following issue when running the OpenVINO™ samples,

amr-openvino | [ INFO ] Loading model to the device
amr-openvino | Unable to init server: Could not connect: Connection refused
amr-openvino | [ ERROR ] (-2:Unspecified error) Can't initialize GTK backend in function 'cvInitSystem'

install the following dependency, and then allow connections to the X server:

sudo apt install x11-xserver-utils

xhost +
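Before rerunning the sample, you can confirm that the host is ready to show the video window. This is a minimal sketch that assumes the error comes from the container being unable to reach the host X server; the function name check_display is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: checks that DISPLAY is set and that xhost is installed.
check_display() {
    if [ -z "${DISPLAY:-}" ]; then
        echo "DISPLAY is not set; GUI windows cannot open" >&2
        return 1
    fi
    if ! command -v xhost >/dev/null 2>&1; then
        echo "xhost not found; install x11-xserver-utils" >&2
        return 1
    fi
    echo "DISPLAY=$DISPLAY and xhost is available"
}

# Usage:
#   check_display && xhost +
```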

For general robot issues, go to: Troubleshooting for Robot Tutorials.