Follow-me with ADBSCAN and Gesture Control

This demo of the Follow-me algorithm shows a Robotics SDK application for following a target person, where the movement of the robot is controlled by the person’s location and hand gestures. The entire pipeline diagram can be found on the Follow-me Algorithm page. This demo contains only the ADBSCAN and gesture recognition modules in the input-processing application stack; no text-to-speech synthesis module is present in the output-processing application stack. This demo has been tested and validated on 12th Generation Intel® Core™ processors with Intel® Iris® Xe Integrated Graphics (known as Alder Lake-P). This tutorial describes how to launch the demo in the Gazebo simulator.

Getting Started

Install the Deb package

Install the ros-humble-followme-turtlebot3-gazebo Deb package from the Intel® Robotics SDK APT repository. This is a wrapper package that launches all of the dependencies in the backend.

Install Python Modules

This application uses the MediaPipe Hands framework for hand gesture recognition. Install the following modules as a prerequisite for the framework:

Run Demo with 2D Lidar

Run the following script to launch the Gazebo simulator and ROS 2 rviz2.

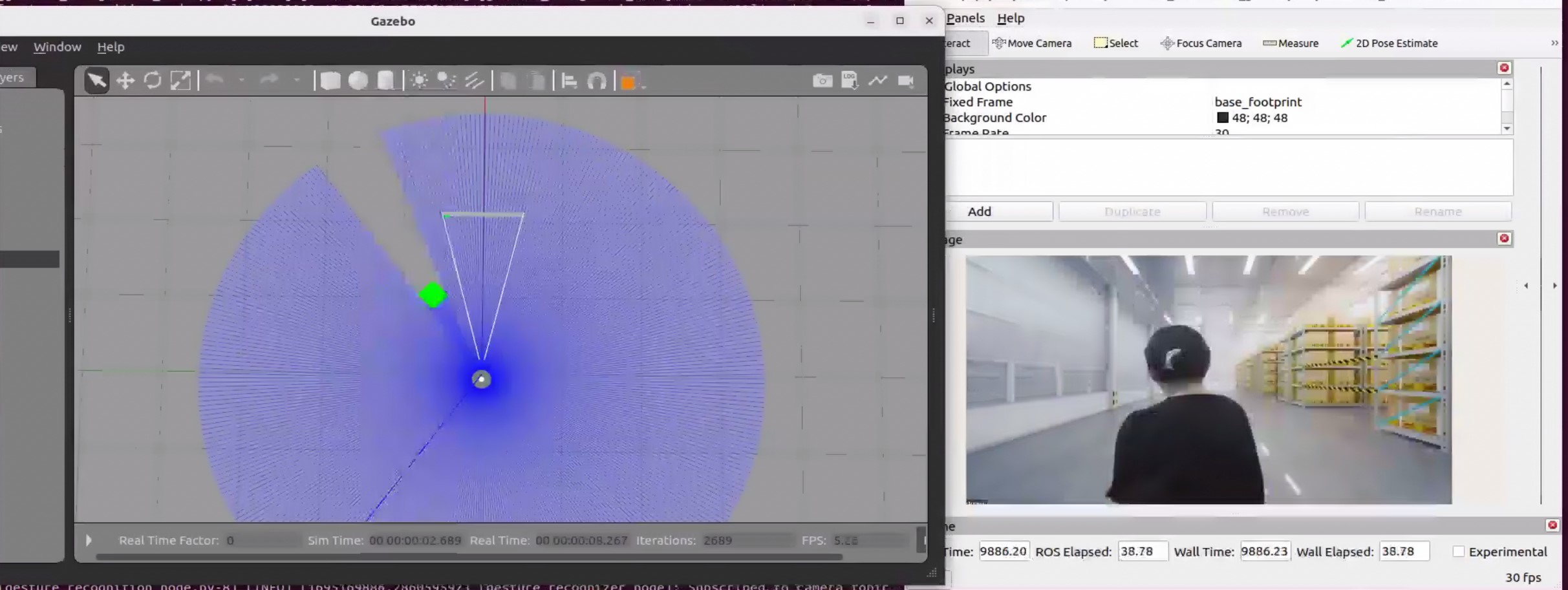

You will see two panels side by side: the Gazebo GUI on the left and the ROS 2 rviz2 display on the right.

The green square robot is the guide robot (that is, the target), which follows a predefined trajectory.

The gray circular robot is a TurtleBot3, which follows the guide robot. The TurtleBot3 is equipped with a 2D Lidar and an Intel® RealSense™ depth camera. In this demo, the 2D Lidar is used as the input sensor.

Both of the following conditions must be met to start the TurtleBot3:

The target (guide robot) is within the tracking radius (a reconfigurable parameter in /opt/ros/humble/share/adbscan_ros2_follow_me/config/adbscan_sub_2D.yaml) of the TurtleBot3.

The gesture of the target (visualized in the /image topic in ROS 2 rviz2) is thumbs up.
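The thumbs-up check can be illustrated with a small classifier over the 21-point hand landmark layout used by MediaPipe Hands, where index 2 is the thumb MCP joint, index 4 is the thumb tip, and indices 8, 12, 16, and 20 are the other fingertips. This is a minimal sketch under assumptions: the rule (thumb tip clearly above or below its MCP joint and beyond every other fingertip), the function name classify_thumb_gesture, and the margin value are hypothetical and are not the gesture recognition package's actual method.

```python
from typing import Optional, Sequence, Tuple

# Indices in the MediaPipe Hands 21-point hand landmark layout.
THUMB_MCP = 2
THUMB_TIP = 4
OTHER_FINGERTIPS = (8, 12, 16, 20)  # index, middle, ring, pinky tips

# Normalized image coordinates (x, y); y grows downward in the image.
Point = Tuple[float, float]


def classify_thumb_gesture(landmarks: Sequence[Point], margin: float = 0.05) -> Optional[str]:
    """Return "thumbs_up", "thumbs_down", or None for one detected hand.

    Hypothetical heuristic: the thumb tip must be the highest (or lowest)
    fingertip and sit at least `margin` above (or below) the thumb MCP joint.
    """
    if len(landmarks) != 21:
        raise ValueError("expected 21 hand landmarks")
    tip_y = landmarks[THUMB_TIP][1]
    mcp_y = landmarks[THUMB_MCP][1]
    others_y = [landmarks[i][1] for i in OTHER_FINGERTIPS]
    # Smaller y means higher in the image.
    if tip_y < mcp_y - margin and tip_y < min(others_y):
        return "thumbs_up"
    if tip_y > mcp_y + margin and tip_y > max(others_y):
        return "thumbs_down"
    return None
```

With the MediaPipe Python solutions API, the input list could be built from one detected hand as [(lm.x, lm.y) for lm in hand.landmark].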

The TurtleBot3 stops when either of the following conditions is true:

The target (guide robot) moves farther from the TurtleBot3 than the tracking radius (a reconfigurable parameter in /opt/ros/humble/share/adbscan_ros2_follow_me/config/adbscan_sub_2D.yaml).

The gesture of the target (visualized in the /image topic in ROS 2 rviz2) is thumbs down.
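The start and stop conditions above behave like a latch: a thumbs up inside the tracking radius starts following, and following continues until a thumbs down or the target leaving the radius. The following is a minimal sketch of that logic combined with a simple proportional velocity command. The parameter names (tracking_radius, safe_distance, max_linear, max_angular), their default values, the gains, and the robot-frame convention (x forward, y left) are assumptions for illustration; the real parameters are defined in the YAML files under /opt/ros/humble/share/adbscan_ros2_follow_me/config/.

```python
import math
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class FollowParams:
    # Hypothetical names and values, not the actual YAML keys.
    tracking_radius: float = 2.0  # m
    safe_distance: float = 0.5    # m
    max_linear: float = 0.3       # m/s
    max_angular: float = 0.6      # rad/s
    k_linear: float = 0.5         # assumed proportional gains
    k_angular: float = 1.0


class FollowMeStateMachine:
    """Latched start/stop logic plus a clamped proportional controller."""

    def __init__(self, params: FollowParams) -> None:
        self.params = params
        self.following = False

    def update(self, target: Optional[Tuple[float, float]], gesture: Optional[str]) -> Tuple[float, float]:
        """Return (linear, angular) velocity for one control cycle.

        `target` is the target position (x forward, y left) in the robot
        frame, or None if no target was detected this cycle.
        """
        p = self.params
        distance = math.hypot(*target) if target is not None else math.inf
        in_range = distance <= p.tracking_radius

        if not in_range or gesture == "thumbs_down":
            self.following = False  # stop: either condition is enough
        elif gesture == "thumbs_up":
            self.following = True   # start: in range AND thumbs up

        if not self.following:
            return 0.0, 0.0

        x, y = target  # not None here, because following implies in_range
        heading_error = math.atan2(y, x)
        # Never command forward motion closer than the safe distance.
        linear = p.k_linear * max(0.0, distance - p.safe_distance)
        angular = p.k_angular * heading_error
        # Clamp to the velocity limits (linear is already non-negative).
        linear = min(p.max_linear, linear)
        angular = max(-p.max_angular, min(p.max_angular, angular))
        return linear, angular
```

Latching the state means the person does not have to hold the thumbs-up gesture while walking; only a thumbs down or leaving the tracking radius stops the robot.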

Run Demo with Intel® RealSense™ Camera

Execute the following commands one by one in three separate terminals.

Terminal 1: This command opens the Gazebo simulator and ROS 2 rviz2.

Terminal 2: This command launches the ADBSCAN Deb package. It runs the ADBSCAN node on the point cloud data to detect the location of the target.

Terminal 3: This command runs the gesture recognition package.

In this demo, the Intel® RealSense™ camera of the TurtleBot3 is selected as the input point cloud sensor. After running all of the above commands, you will observe similar behavior of the TurtleBot3 and the guide robot in the Gazebo GUI as in Run Demo with 2D Lidar.
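The ADBSCAN node started in Terminal 2 clusters the point cloud to find the target. The sketch below substitutes plain DBSCAN with a fixed eps and min_samples for the adaptive variant (the adaptive parameter computation is not reproduced here) and then picks the cluster whose centroid is closest to the previous target location. The function names and the nearest-centroid selection rule are hypothetical and only illustrate the idea of density-based target detection on 2D points.

```python
import math
from typing import Dict, List, Optional, Sequence, Tuple

Point = Tuple[float, float]


def dbscan(points: Sequence[Point], eps: float, min_samples: int) -> List[int]:
    """Plain (non-adaptive) DBSCAN. Returns one cluster id per point; -1 marks noise."""
    n = len(points)
    labels: List[Optional[int]] = [None] * n  # None means "not visited yet"

    def region(i: int) -> List[int]:
        # Brute-force eps-neighborhood, including the point itself.
        return [j for j in range(n) if math.dist(points[i], points[j]) <= eps]

    cluster = 0
    for i in range(n):
        if labels[i] is not None:
            continue
        neighbors = region(i)
        if len(neighbors) < min_samples:
            labels[i] = -1  # noise for now; may later become a border point
            continue
        labels[i] = cluster
        queue = [j for j in neighbors if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster  # border point: joins the cluster, not expanded
            if labels[j] is not None:
                continue
            labels[j] = cluster
            j_neighbors = region(j)
            if len(j_neighbors) >= min_samples:
                queue.extend(j_neighbors)  # j is a core point: keep growing
        cluster += 1
    return [label if label is not None else -1 for label in labels]


def locate_target(points: Sequence[Point], previous_target: Point,
                  eps: float = 0.2, min_samples: int = 3) -> Optional[Point]:
    """Return the centroid of the cluster nearest to previous_target, or None."""
    clusters: Dict[int, List[Point]] = {}
    for point, label in zip(points, dbscan(points, eps, min_samples)):
        if label >= 0:
            clusters.setdefault(label, []).append(point)
    if not clusters:
        return None
    centroids = [
        (sum(x for x, _ in c) / len(c), sum(y for _, y in c) / len(c))
        for c in clusters.values()
    ]
    return min(centroids, key=lambda c: math.dist(c, previous_target))
```

Brute-force neighborhoods keep the sketch short but cost O(n²) per frame, which is one reason to downsample the point cloud before clustering.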

Note

Reconfigurable parameters are provided in the /opt/ros/humble/share/adbscan_ros2_follow_me/config/ directory for both LIDAR (adbscan_sub_2D.yaml) and Intel® RealSense™ camera (adbscan_sub_RS.yaml) input. You can modify these parameters to suit your robot, sensor configuration, and environment (if required) before running the tutorial. A brief description of the parameters follows:

- Type of the point cloud sensor. For Intel® RealSense™ camera and LIDAR inputs, the default value is set to …
- Name of the topic publishing point cloud data.
- If this flag is set to …
- Downsampling rate of the original point cloud data. Default value: 15 (that is, every 15th point in the original point cloud is sampled and passed to the core ADBSCAN algorithm).
- Point cloud data with x-coordinate > …
- Point cloud data with y-coordinate > …
- Point cloud data with z-coordinate < …
- Filtering in the z-direction is applied only if this value is nonzero. This option is ignored for 2D Lidar input.
- Coefficients used to calculate the adaptive parameters of the ADBSCAN algorithm. These values are precomputed, and it is recommended to keep them unchanged.
- Initial target location. The person needs to be at a distance of …
- Maximum distance that the robot can follow. If the person moves at a distance > …
- Safe distance the robot always maintains from the target person. If the person moves closer than …
- Maximum linear velocity of the robot.
- Maximum angular velocity of the robot.
- The robot keeps following the target for …
- The robot keeps following the target as long as the current target location = previous location +/- …
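The downsampling and coordinate-filtering parameters described above can be sketched as a preprocessing step that runs before clustering. This is a minimal illustration under assumptions: the argument names (subsample_ratio, x_max, y_max, z_min, is_2d_lidar) are hypothetical, and because the x, y, and z descriptions above are truncated, the sketch assumes that points beyond each threshold are discarded. Only the default ratio of 15, the nonzero-only z filtering, and the 2D Lidar exception come from the descriptions.

```python
from typing import List, Optional, Sequence, Tuple

Point3 = Tuple[float, float, float]


def preprocess_cloud(
    points: Sequence[Point3],
    subsample_ratio: int = 15,
    x_max: Optional[float] = None,
    y_max: Optional[float] = None,
    z_min: float = 0.0,
    is_2d_lidar: bool = False,
) -> List[Point3]:
    """Downsample, then crop a point cloud before clustering (hypothetical helper)."""
    if subsample_ratio < 1:
        raise ValueError("subsample_ratio must be >= 1")
    # Keep every subsample_ratio-th point (documented default: 15).
    sampled = list(points[::subsample_ratio])
    kept: List[Point3] = []
    for x, y, z in sampled:
        if x_max is not None and x > x_max:
            continue  # assumed: points beyond the x threshold are discarded
        if y_max is not None and y > y_max:
            continue  # assumed: points beyond the y threshold are discarded
        # z filtering runs only for a nonzero threshold and never for 2D Lidar.
        if z_min != 0.0 and not is_2d_lidar and z < z_min:
            continue
        kept.append((x, y, z))
    return kept
```

Subsampling first keeps the per-point filtering cheap; with the default ratio, a cloud of 30 points is reduced to the points at indices 0 and 15 before any cropping.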

Troubleshooting

Failed to install Deb package: Make sure to run sudo apt update before installing the necessary Deb packages.

You can stop the demo at any time by pressing Ctrl-C. If the Gazebo simulator freezes or does not stop, use the following command in a terminal: