Follow-me with ADBSCAN, Gesture and Audio Control

This demo of the Follow-me algorithm shows a Robotics SDK application for following a target person, in which the robot’s movement is controlled by the person’s location, hand gestures, and audio commands. The full pipeline diagram can be found on the Follow-me Algorithm page. The application takes point cloud data from a sensor (2D Lidar or depth camera) and RGB camera images as inputs. These inputs are passed through the Intel®-patented Adaptive DBSCAN (ADBSCAN) algorithm and a deep-learning-based gesture recognition pipeline, respectively, to publish motion command messages for a differential drive robot. The application also takes recorded audio commands for starting and stopping the robot’s movement. In addition, the demo includes a text-to-speech synthesis model that narrates the robot’s activity over the course of its movement.

This demo has been tested and validated on 13th Generation Intel® Core™ processors with Intel® Iris® Xe Integrated Graphics and 12th Generation Intel® Core™ processors with Intel® Iris® Xe Integrated Graphics. This tutorial describes how to launch the demo in the Gazebo simulator.

Getting Started

Install the Deb packages

Install the ros-humble-followme-turtlebot3-gazebo and ros-humble-text-to-speech-pkg Deb packages from the Intel® Robotics SDK APT repository. ros-humble-followme-turtlebot3-gazebo is the wrapper package for the demo, which installs all of the dependencies in the background.

Install Pre-requisite Libraries

Install the pre-requisite modules for running the framework:

If you are behind a network proxy, make sure to pass --proxy <http-proxy-url> to the pip install command.

Run Demo with 2D Lidar

Make sure to source the /opt/ros/humble/setup.bash file before executing any command in a new terminal. You can find more details on the Prepare the Target System page.

Run the following commands one by one in five separate terminals:

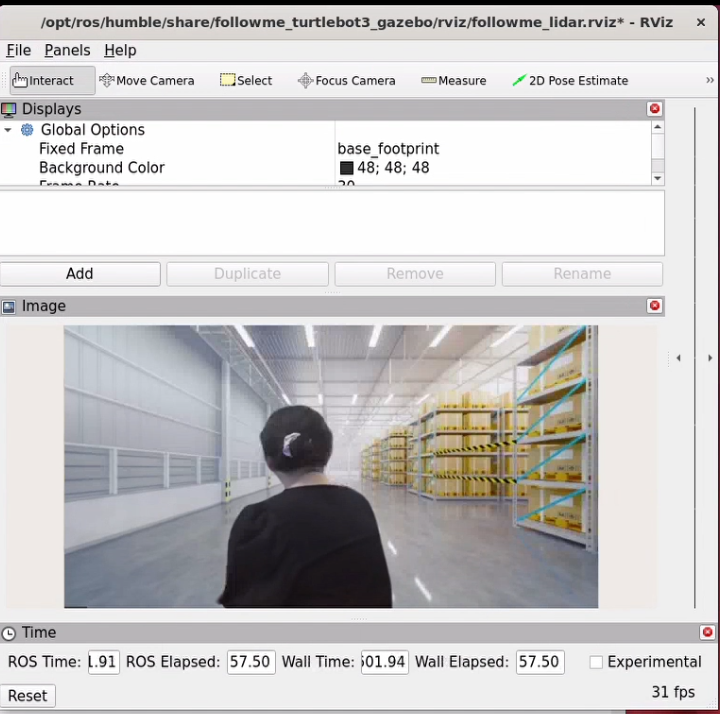

Terminal 1: This command will open ROS 2 rviz2.

You will see ROS 2 rviz2 with a panel for Image visualization. It will display the RGB image published by the simulated RGB camera.

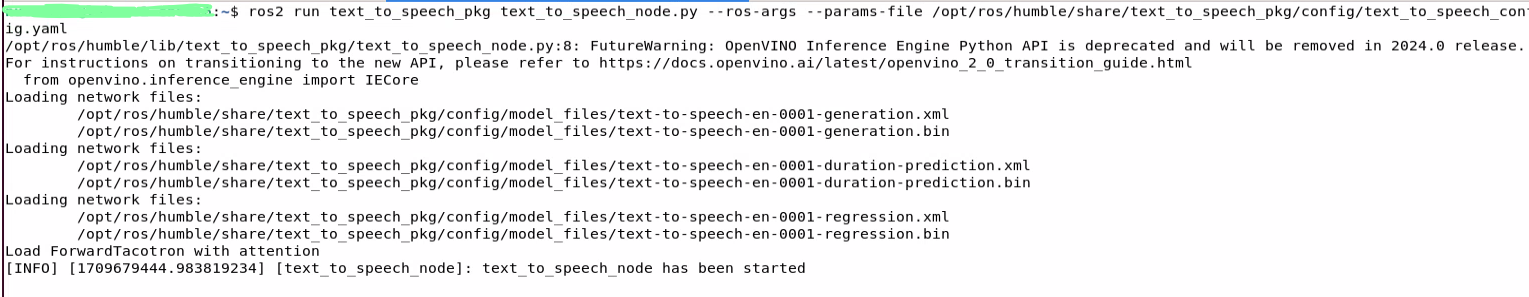

Terminal 2: This command will launch ros-humble-text-to-speech-pkg.

You will see the ROS 2 node starting up and loading the parameter files for the underlying neural networks. This operation may take ~5-10 seconds depending on the system speed.

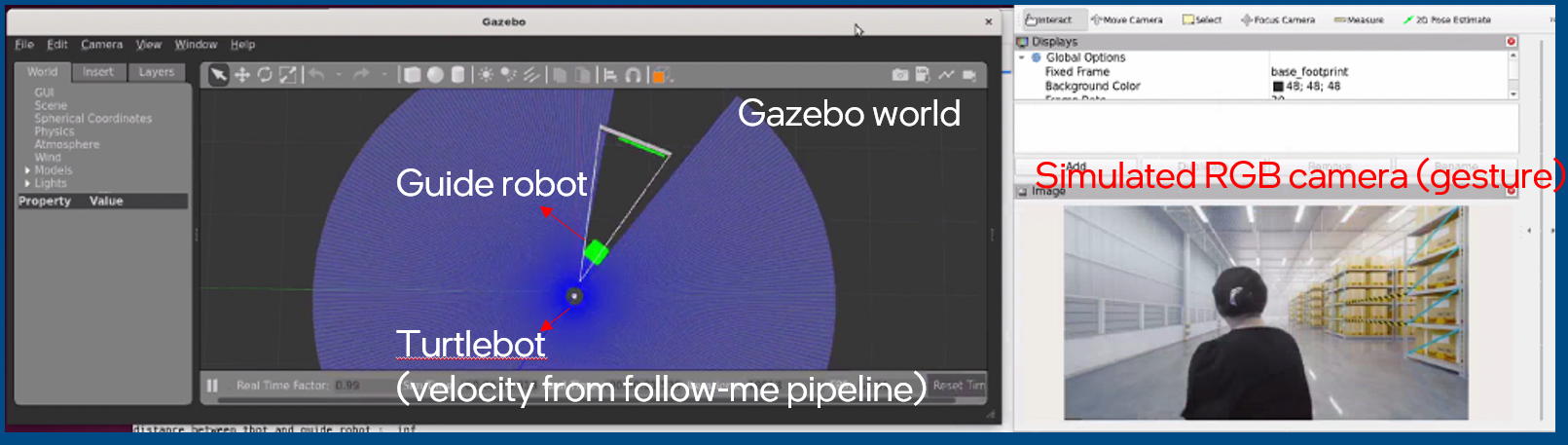

Terminal 3: This command will launch Gazebo.

You will see the Gazebo GUI with two simulated robots in an empty Gazebo world. We suggest resizing the Gazebo and rviz2 windows and placing them side by side (as in the following picture) for better visualization of the demo.

The green square robot is a guide robot (namely, the target), which will follow a pre-defined trajectory.

The gray circular robot is a TurtleBot3, which will follow the guide robot. The TurtleBot3 is equipped with a 2D Lidar and an Intel® RealSense™ depth camera. In this demo, the 2D Lidar is selected as the point cloud input.

In this demo, the guide robot follows a pre-defined trajectory, and gesture images as well as pre-recorded audio are published at different points in time to show the start, follow and stop activities of the TurtleBot3.

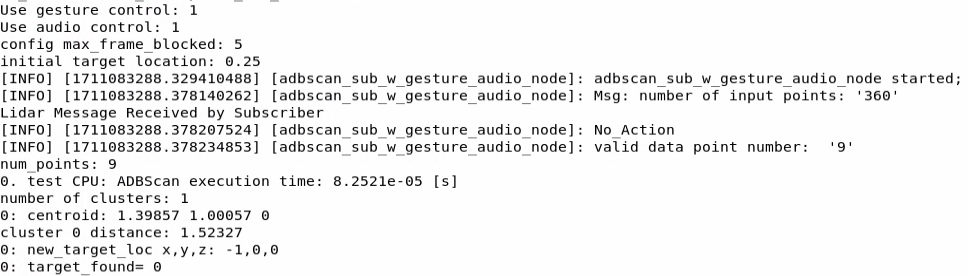

Terminal 4: This command will launch the adbscan node, which will publish twist messages to the tb3/cmd_vel topic:

You will view the following information in the terminal.

Terminal 5: This command will launch the pre-defined trajectory for the guide robot as well as the simulated gesture images and pre-recorded audio publisher nodes:

Note

If you are running the demo on 13th Generation Intel® Core™ processors with Intel® Iris® Xe Integrated Graphics (known as Raptor Lake-P), please replace the commands in terminal 5 with the following:

This command will display the following information:

As soon as the last command is executed, the guide robot starts moving towards the TurtleBot3. For the TurtleBot3 to start moving, condition 1 and either condition 2 or condition 3 from the following list must be true:

1. The target (guide robot) is located within the tracking radius (a reconfigurable parameter in the parameter file: /opt/ros/humble/share/adbscan_ros2_follow_me/config/adbscan_sub_2D.yaml) of the TurtleBot3.

2. The gesture (visualized in the /image topic in ROS 2 rviz2) of the target is thumbs up.

3. The detected audio from the recording is Start Following.

The stop condition for the TurtleBot3 is fulfilled when any one of the following conditions holds true:

- The target (guide robot) moves to a distance of more than the tracking radius (a reconfigurable parameter in the parameter file: /opt/ros/humble/share/adbscan_ros2_follow_me/config/adbscan_sub_2D.yaml) from the TurtleBot3.

- The gesture (visualized in the /image topic in ROS 2 rviz2) of the target is thumbs down.

- The detected audio from the recording is Stop Following.
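The start and stop rules above can be sketched as two small predicates. This is only an illustration of the stated logic, not the demo's actual implementation: the Observation class, the function names, and the choice to treat "within the tracking radius" as "less than or equal to" are all assumptions made for this example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Observation:
    """Hypothetical snapshot of the three control inputs described above."""
    target_distance: float          # distance from the TurtleBot3 to the target
    gesture: Optional[str] = None   # e.g. "thumbs up", "thumbs down", or None
    audio: Optional[str] = None     # e.g. "Start Following", "Stop Following", or None


def should_start(obs: Observation, tracking_radius: float) -> bool:
    """Start requires condition 1 AND (condition 2 OR condition 3)."""
    in_range = obs.target_distance <= tracking_radius   # condition 1 (assumed inclusive)
    gesture_ok = obs.gesture == "thumbs up"             # condition 2
    audio_ok = obs.audio == "Start Following"           # condition 3
    return in_range and (gesture_ok or audio_ok)


def should_stop(obs: Observation, tracking_radius: float) -> bool:
    """Stop requires ANY ONE of the three stop conditions."""
    out_of_range = obs.target_distance > tracking_radius
    return (
        out_of_range
        or obs.gesture == "thumbs down"
        or obs.audio == "Stop Following"
    )
```

For example, with a tracking radius of 0.5, a target at distance 0.4 showing thumbs up satisfies should_start, while the same target with no gesture and no audio does not. A target at distance 0.9 satisfies should_stop regardless of gesture or audio.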

The demo will narrate the detected gesture, audio and target location during the start and stop activity of the TurtleBot3.

Note

The current version of the demo only supports Start, Stop, Start Following and Stop Following audio commands. If the detected audio does not match any of the supported commands, the audio control will be ignored and the movement of the robot will be determined by the remaining criteria. Similarly, hand gesture control will be ignored if it does not match thumbs up or thumbs down. Thereby, any undesired manipulation of the robot is blocked.
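The filtering described in this note can be pictured as a lookup against a fixed set of supported inputs, where anything unrecognized maps to "no input". The sketch below is a hedged illustration only: the table names, the function names, and the case-insensitive, whitespace-tolerant matching are assumptions, and the demo's real matching rules may differ.

```python
from typing import Optional

# Supported inputs taken from the note above; the "start"/"stop" intent labels
# are hypothetical names used only in this sketch.
SUPPORTED_AUDIO = {
    "start": "start",
    "start following": "start",
    "stop": "stop",
    "stop following": "stop",
}
SUPPORTED_GESTURES = {
    "thumbs up": "start",
    "thumbs down": "stop",
}


def _normalize(text: str) -> str:
    """Lowercase and collapse whitespace (an assumed normalization)."""
    return " ".join(text.lower().split())


def audio_intent(transcript: Optional[str]) -> Optional[str]:
    """Return "start"/"stop" for a supported command, else None (ignored)."""
    if not transcript:
        return None
    return SUPPORTED_AUDIO.get(_normalize(transcript))


def gesture_intent(label: Optional[str]) -> Optional[str]:
    """Return "start"/"stop" for a supported gesture, else None (ignored)."""
    if not label:
        return None
    return SUPPORTED_GESTURES.get(_normalize(label))
```

Returning None for an unsupported input lets the caller fall back to the remaining criteria, which mirrors how the note describes unmatched audio and gestures being ignored.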

Run Demo with Intel® RealSense™ Camera

Make sure to source the /opt/ros/humble/setup.bash file before executing any command in a new terminal. You can find more details on the Prepare the Target System page.

Execute the following commands one by one in five separate terminals:

Terminal 1: This command will open ROS 2 rviz2.

You will see the ROS 2 rviz2 GUI with a panel for Image visualization. It will display the RGB image published by the simulated RGB camera.

Terminal 2: This command will launch ros-humble-text-to-speech-pkg.

You will see the ROS 2 node starting up and loading the parameter files for the underlying neural networks. This operation may take ~5-10 seconds depending on the system speed.

Terminal 3: This command will launch Gazebo.

Terminal 4: This command will launch the adbscan node, which will publish twist messages to the tb3/cmd_vel topic:

In this instance, we execute adbscan with the parameter file for Intel® RealSense™ camera input: adbscan_sub_RS.yaml.

You will view the following information in the terminal.

Terminal 5: This command will launch the pre-defined trajectory for the guide robot as well as the simulated gesture images and pre-recorded audio publisher nodes:

Note

If you are running the demo on 13th Generation Intel® Core™ processors with Intel® Iris® Xe Integrated Graphics (known as Raptor Lake-P), please replace the commands in terminal 5 with the following:

After running all of the above commands, you will observe similar behavior of the TurtleBot3 and guide robot in the Gazebo GUI as in Run Demo with 2D Lidar.

Note

There are reconfigurable parameters in /opt/ros/humble/share/adbscan_ros2_follow_me/config/ directory for both LIDAR (adbscan_sub_2D.yaml) and Intel® RealSense™ camera (adbscan_sub_RS.yaml). The user can modify the parameters depending on the respective robot, sensor configuration and environments (if required) before running the tutorial. Find a brief description of the parameters in the following table.

| Parameter | Description |
|---|---|
|  | Type of the point cloud sensor. For Intel® RealSense™ camera and LIDAR inputs, the default value is set to … |
|  | Name of the topic publishing point cloud data. |
|  | If this flag is set to … |
|  | This is the downsampling rate of the original point cloud data. Default value = 15 (i.e. every 15-th data in the original point cloud is sampled and passed to the core ADBSCAN algorithm). |
|  | Point cloud data with x-coordinate > … |
|  | Point cloud data with y-coordinate > … |
|  | Point cloud data with z-coordinate < … |
|  | Filtering in the z-direction will be applied only if this value is non-zero. This option will be ignored in case of 2D Lidar. |
|  | These are the coefficients used to calculate adaptive parameters of the ADBSCAN algorithm. These values are pre-computed and recommended to keep unchanged. |
|  | This value describes the initial target location. The person needs to be at a distance of … |
|  | This is the maximum distance that the robot can follow. If the person moves at a distance > … |
|  | This value describes the safe distance the robot will always maintain with the target person. If the person moves closer than … |
|  | Maximum linear velocity of the robot. |
|  | Maximum angular velocity of the robot. |
|  | The robot will keep following the target for … |
|  | The robot will keep following the target as long as the current target location = previous location +/- … |
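Two of the parameters described above lend themselves to a short illustration: the downsampling rate (default 15) and the maximum linear and angular velocities. The sketch below shows one plausible reading of each. The function names, the argument names, the choice to start sampling at the first point, and the symmetric clamping of velocities are assumptions for this example and are not taken from the adbscan node itself.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]  # (x, y, z) coordinates of one point


def subsample(points: Sequence[Point], ratio: int = 15) -> List[Point]:
    """Keep every `ratio`-th point, starting with the first (assumed offset)."""
    if ratio < 1:
        raise ValueError("ratio must be >= 1")
    return list(points[::ratio])


def clamp(value: float, limit: float) -> float:
    """Restrict a value to the range [-limit, +limit]."""
    return max(-limit, min(limit, value))


def limit_command(
    linear: float, angular: float, max_linear: float, max_angular: float
) -> Tuple[float, float]:
    """Clamp a (linear, angular) velocity pair to the configured maxima."""
    return clamp(linear, max_linear), clamp(angular, max_angular)
```

With the default rate of 15, a cloud of 45 points is reduced to 3 points before clustering, which is why a larger rate trades detection detail for lower compute load.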

Troubleshooting

- Failed to install Deb package: Please make sure to run sudo apt update before installing the necessary Deb packages.

- Please make sure to prepare your environment before executing ROS 2 commands in a new terminal. You can find the instructions on the Prepare the Target System page.

- You can stop the demo anytime by pressing ctrl-C. If the Gazebo simulator freezes or does not stop, please use the following command in a terminal:

- We used the simpleaudio Python library to play back audio. The necessary dependencies are installed in the Install Pre-requisite Libraries step. Please make sure that the system speakers are available and unmuted in order to hear the audio played during the demo.