Collaborative Visual SLAM¶

Collaborative Visual SLAM is compiled natively for both Intel® Core™ and Intel® Atom® processor-based systems. In addition, GPU acceleration may be enabled on selected Intel® Core™ processor-based systems. The default installation of Collaborative Visual SLAM is designed to run on the widest range of processors.

Collaborative Visual SLAM Versions Available¶

Intel SSE-only CPU instruction accelerated package for Collaborative SLAM (installed by default):

Intel AVX2 CPU instruction accelerated package for Collaborative SLAM:

Intel GPU Level-Zero accelerated package for Collaborative SLAM:

The tutorials below should work for any compatible version of Collaborative Visual SLAM that is installed. Use the instructions above to switch between versions and experiment with the different accelerations.

Note

When installing a Collaborative SLAM package, use the command-line tool named in the prompt below to identify the integrated GPU on your system, then select that GPU generation during the installation process. If unsure, select option 3 (gen12lp).

    Configuring liborb-lze
    ----------------------
    Select the Intel integrated GPU present on this system.
    Hint: In another terminal install 'intel-gpu-tools' (sudo apt install intel-gpu-tools), then execute 'intel_gpu_top' to view CPU/GPU details (sudo intel_gpu_top -L)
    1. gen9
    2. gen11
    3. gen12lp
    Select GPU Generation (1, 2, or 3):

Select a Collaborative Visual SLAM Tutorial to Run¶

Collaborative Visual SLAM with Two Robots: uses as input two ROS 2 bags that simulate two robots exploring the same area

The ROS 2 tool rviz2 is used to visualize the two robots, the server, and how the server merges the two local maps of the robots into one common map.

The output includes the estimated pose of the camera and visualization of the internal map.

All input and output are in standard ROS 2 formats.

Collaborative Visual SLAM with FastMapping Enabled: uses as an input a ROS 2 bag that simulates a robot exploring an area

Collaborative visual SLAM has the FastMapping algorithm integrated.

For more information on FastMapping, see How it Works.

The ROS 2 tool rviz2 is used to visualize the robot exploring the area and how FastMapping creates the 2D and 3D maps.

Collaborative Visual SLAM with Multi-Camera Feature: uses as an input a ROS 2 bag that simulates a robot with two Intel® RealSense™ cameras exploring an area.

Collaborative visual SLAM enables tracker frame-level pose fusion using a Kalman filter (part of the loosely coupled solution for the multi-camera feature).

The ROS 2 tool rviz2 is used to visualize the estimated poses of the different cameras.

Collaborative Visual SLAM with 2D Lidar Enabled: uses as an input a ROS 2 bag that simulates a robot exploring an area

Collaborative visual SLAM enables 2D Lidar based frame-to-frame tracking for RGBD input.

The ROS 2 tool rviz2 is used to visualize the trajectory of the robot when 2D Lidar is used.

Collaborative Visual SLAM with Region-wise Remapping Feature: uses as an input a ROS 2 bag that simulates a robot updating a pre-constructed keyframe/landmark map and 3D octree map in remapping mode, with the region supplied manually by the user.

The ROS 2 tool rviz2 is used to visualize the region-wise remapping process, including loading and updating the pre-constructed keyframe/landmark map and 3D octree map.

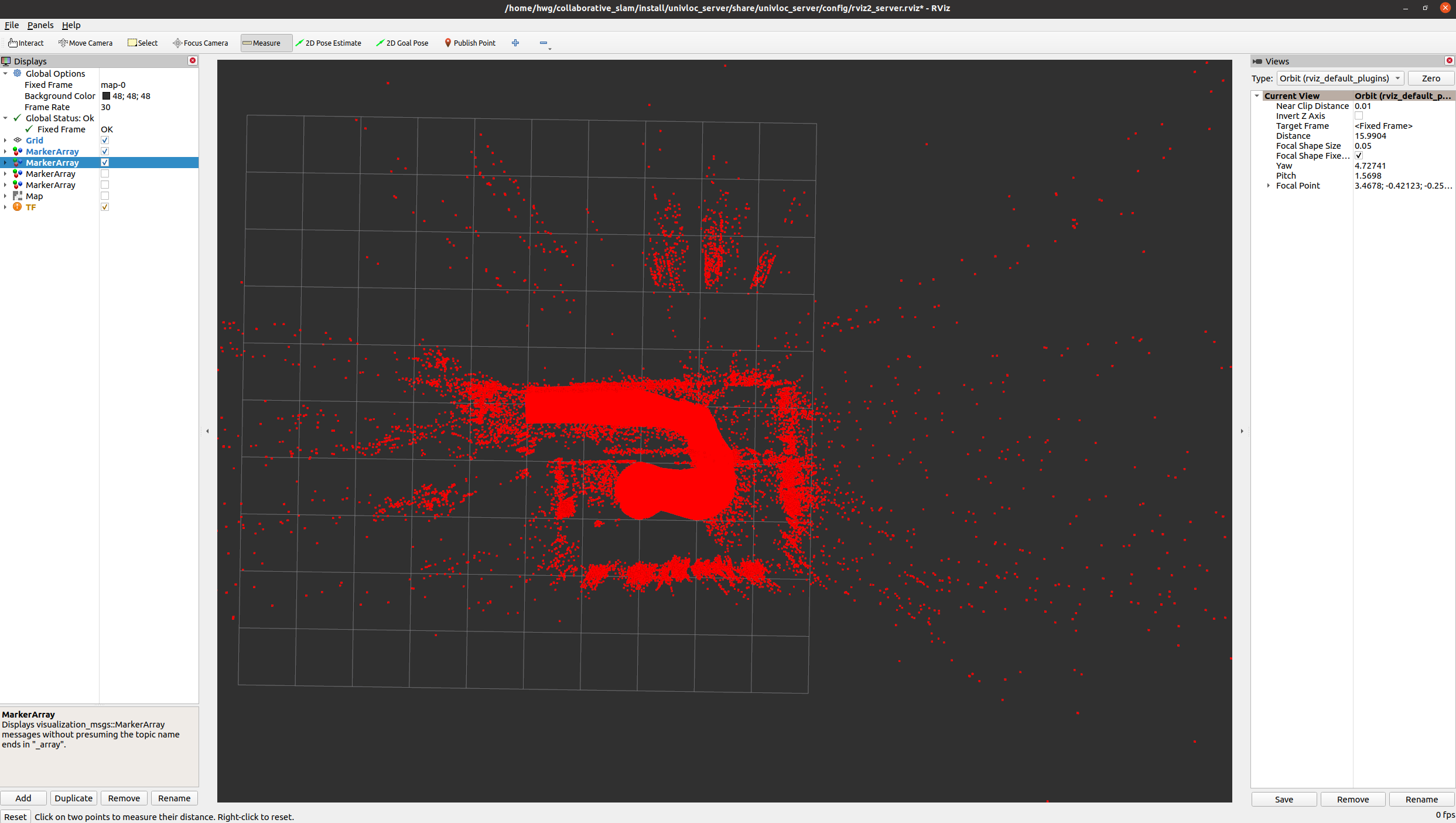

Collaborative Visual SLAM with Two Robots¶

To download and install the tutorial run the command below:

Note: In this installation package, there are two substantial ROS 2 bag files, which are approximately 6.8 GB and 2.6 GB in size.

Run the Collaborative Visual SLAM algorithm using two bags simulating two robots going through the same area:

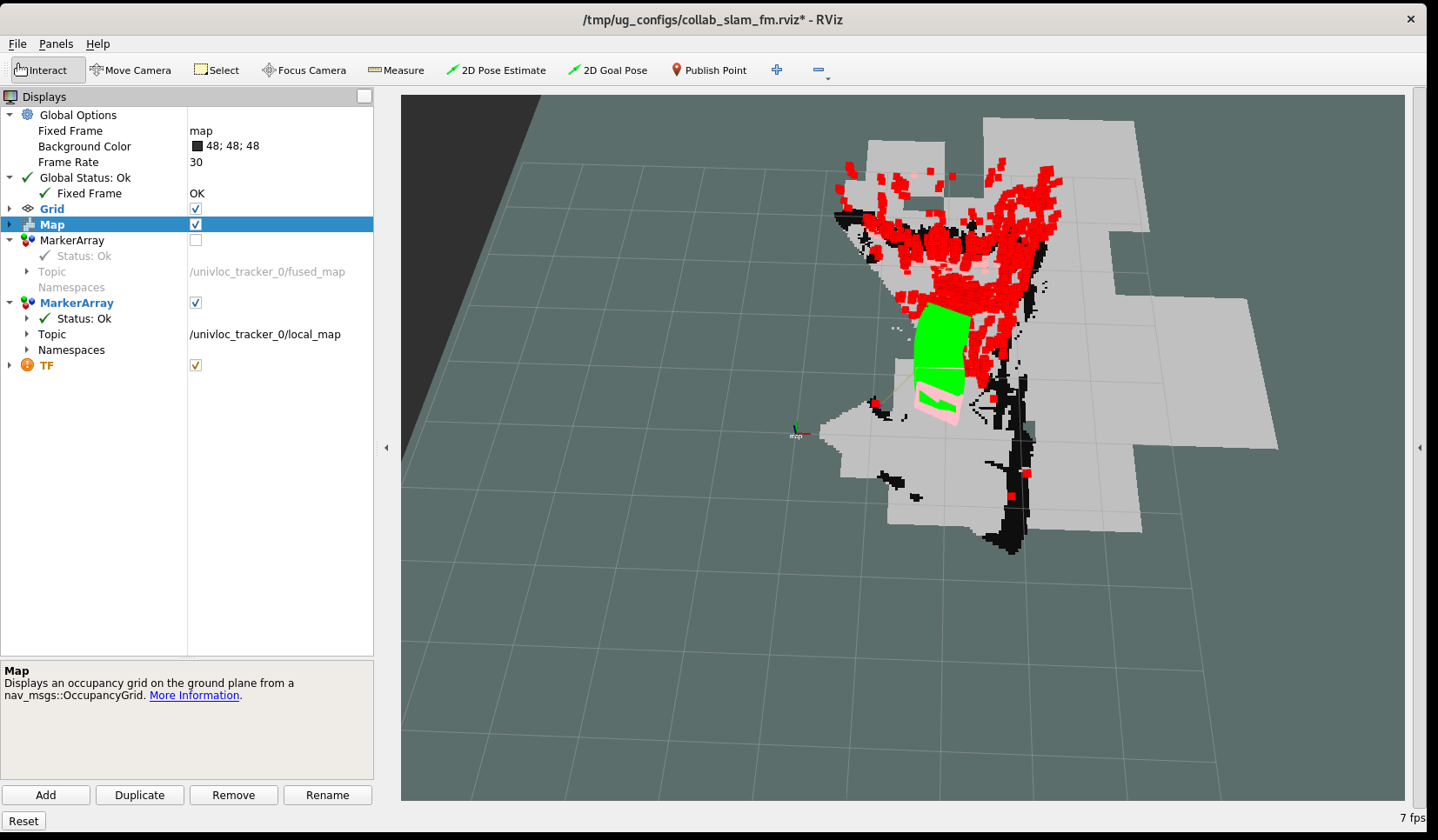

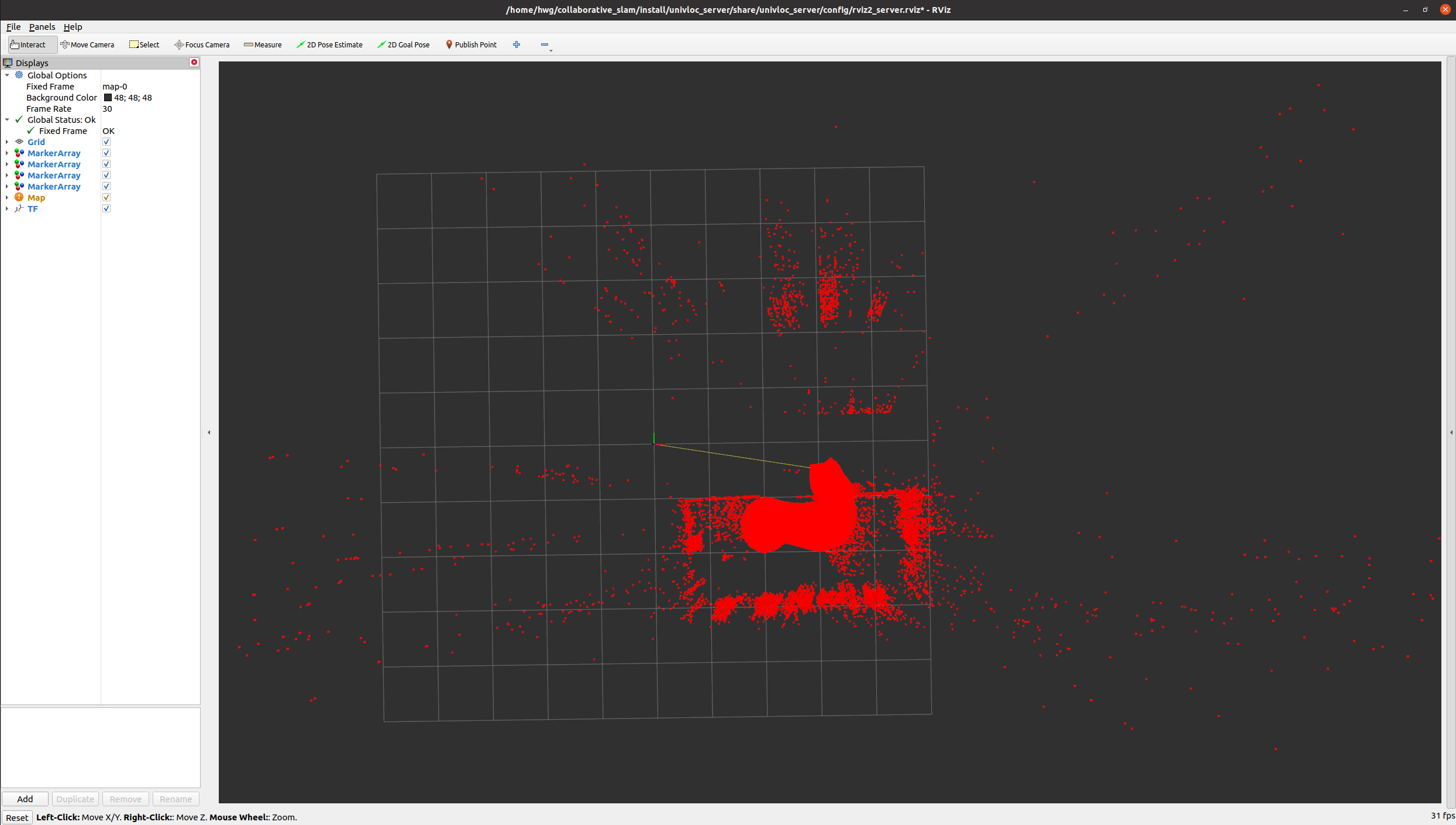

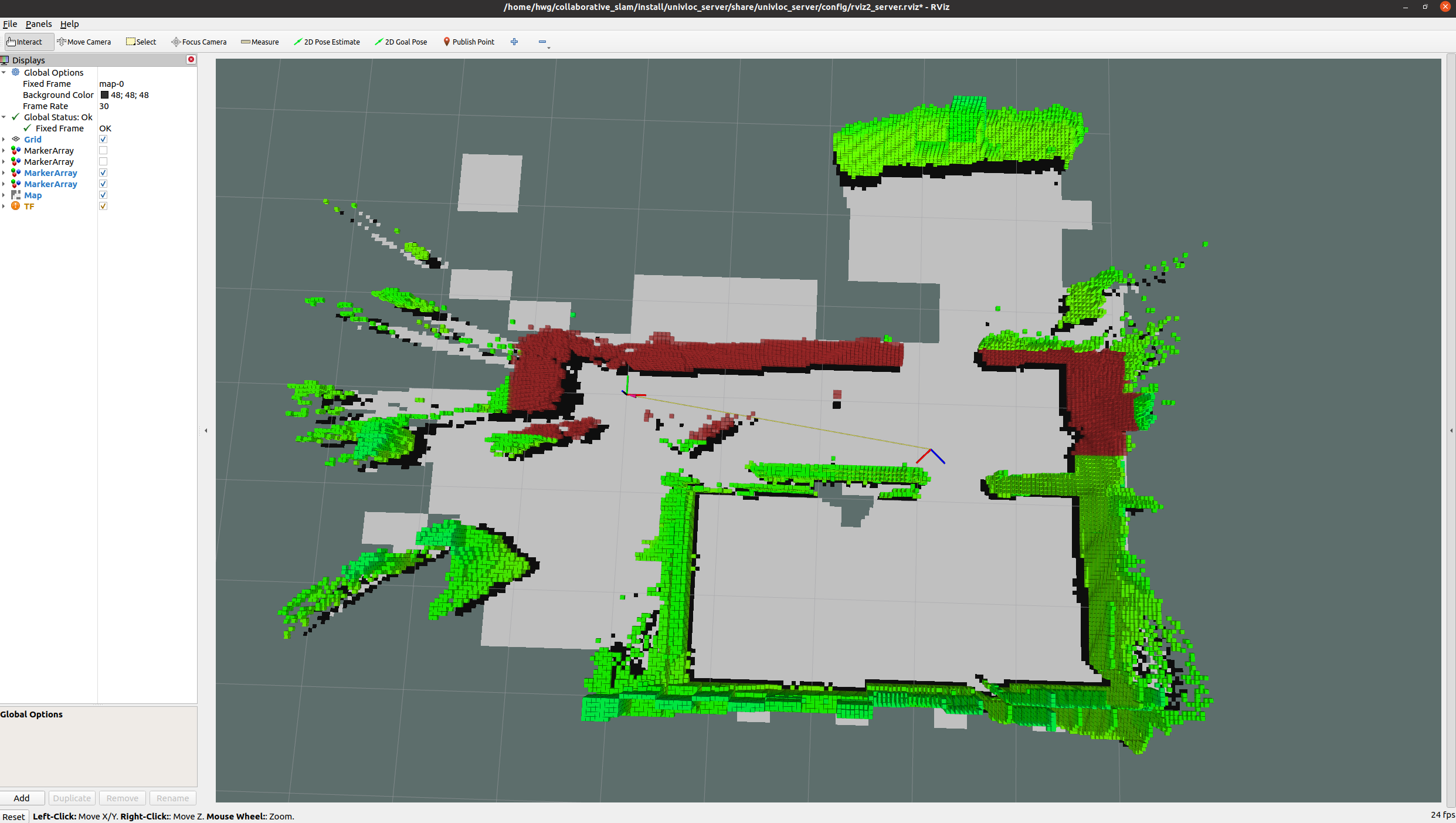

Expected result: On the server rviz2, both trackers are seen.

Red indicates the path robot 1 is taking right now.

Blue indicates the path robot 2 took.

Green indicates the points known to the server.

You may stop execution of the script any time by pressing CTRL-C. This tutorial demo is complete when the output to the console indicates that no further images are being processed. (hint: look for the output, “got 0 images in past 3.0s”). Press CTRL-C when you see this to stop the executing script.
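The completion check described above can also be automated. The following is a minimal Python sketch, not part of the tutorial package: it scans console lines for the idle message quoted in the hint. The function names `is_idle_line` and `first_idle_index`, the regular expression, and the sample log lines are assumptions made for illustration; only the quoted message text comes from this page.

```python
import re

# Matches the idle message quoted in this tutorial ("got 0 images in past 3.0s").
# Anything about the log format beyond that quoted text is an assumption.
IDLE_PATTERN = re.compile(r"got 0 images in past \d+(?:\.\d+)?s")


def is_idle_line(line):
    """Return True if a console line reports that no images were processed."""
    return IDLE_PATTERN.search(line) is not None


def first_idle_index(lines):
    """Return the index of the first idle line, or None if there is none."""
    for index, line in enumerate(lines):
        if is_idle_line(line):
            return index
    return None


if __name__ == "__main__":
    # Hypothetical console output; real lines may carry other prefixes.
    sample = [
        "[tracker] got 30 images in past 3.0s",
        "[tracker] got 0 images in past 3.0s",  # idle: safe to press CTRL-C
    ]
    print(first_idle_index(sample))
```

The same check applies to the other tutorials on this page, since they all report completion with the same message.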

Collaborative Visual SLAM with FastMapping Enabled¶

To download and install the tutorial run the command below:

Note: In this installation package, there is a substantial ROS 2 bag file, which is approximately 6.8 GB in size.

Run the collaborative visual SLAM algorithm with FastMapping enabled:

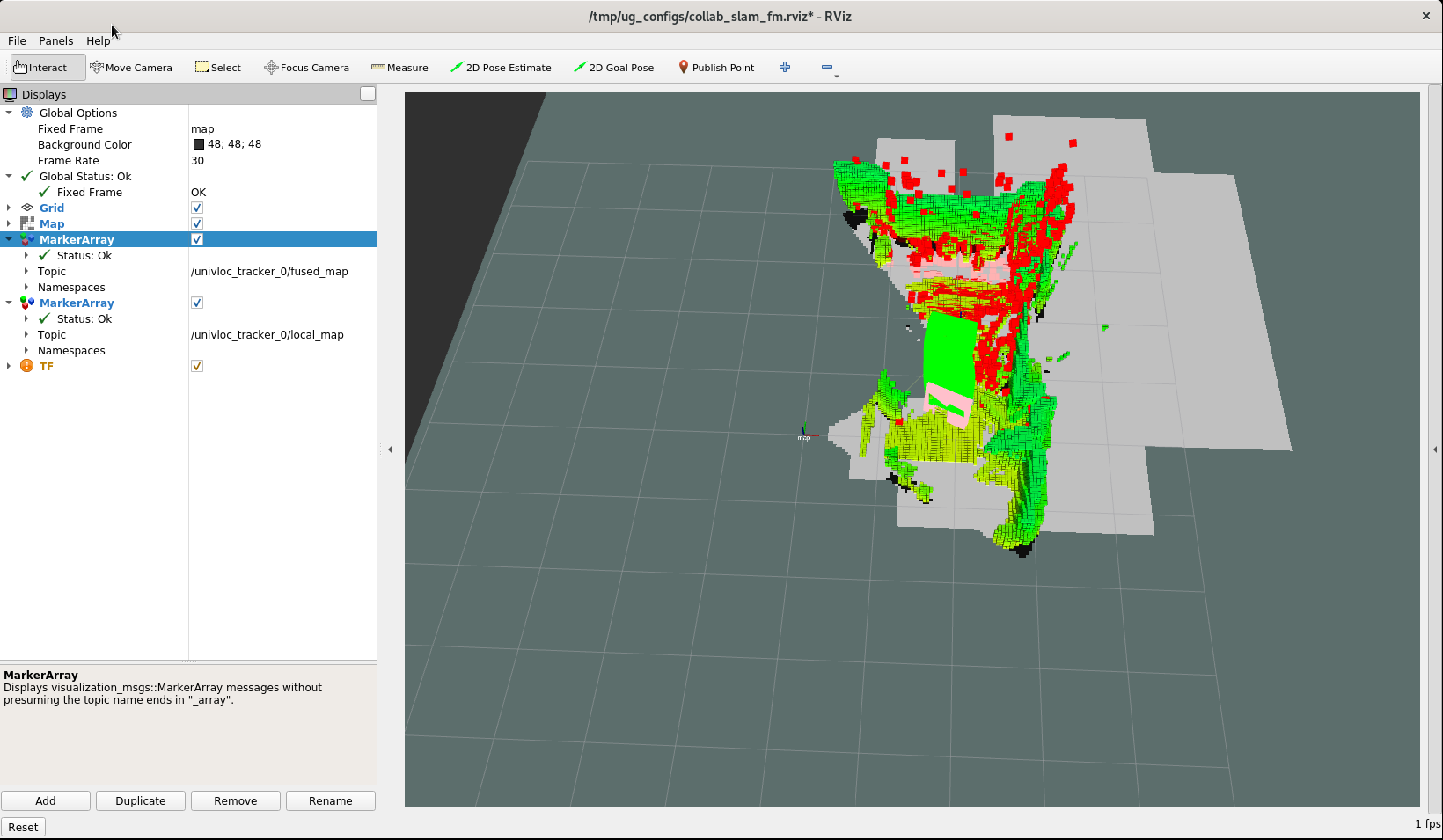

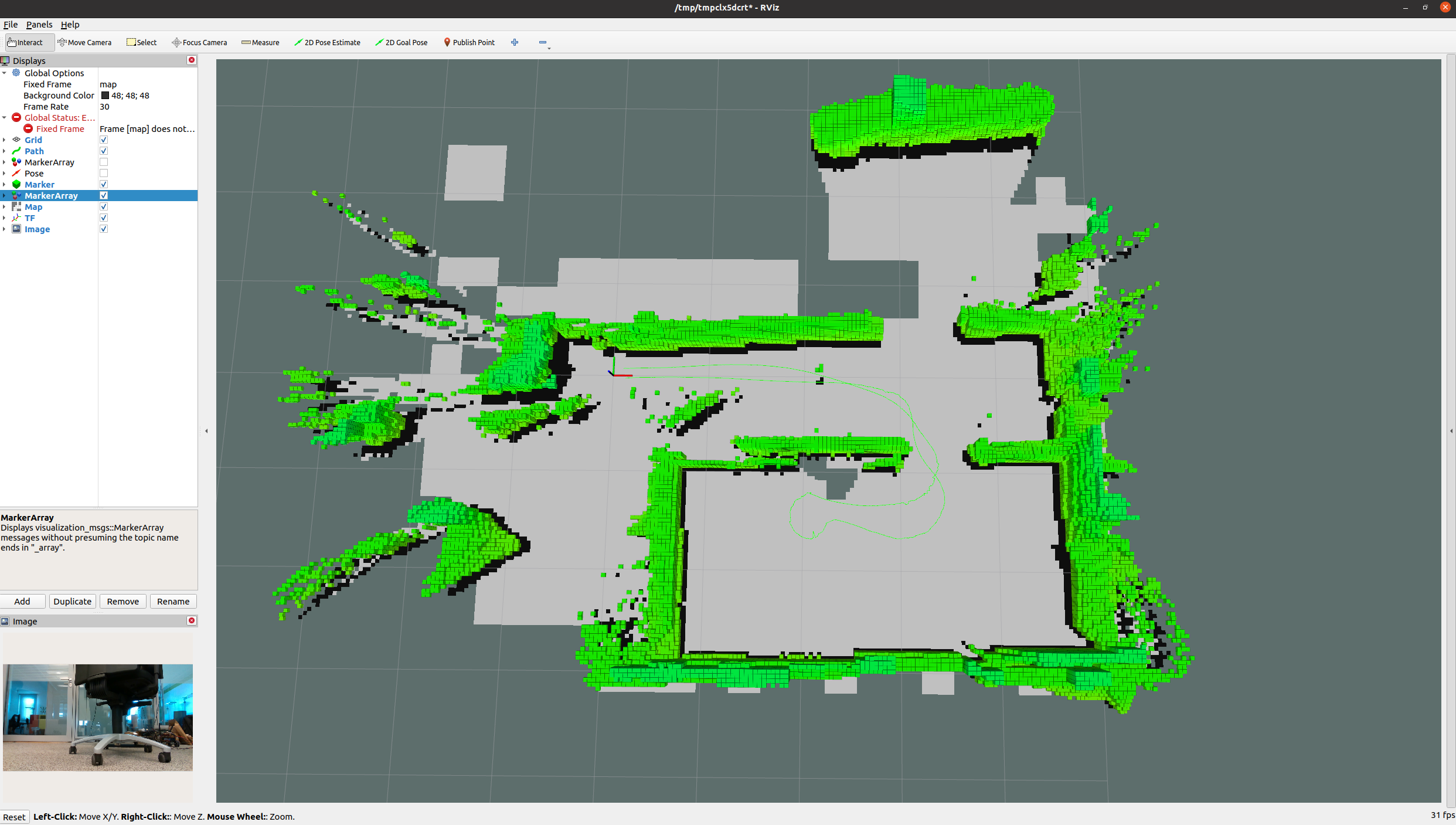

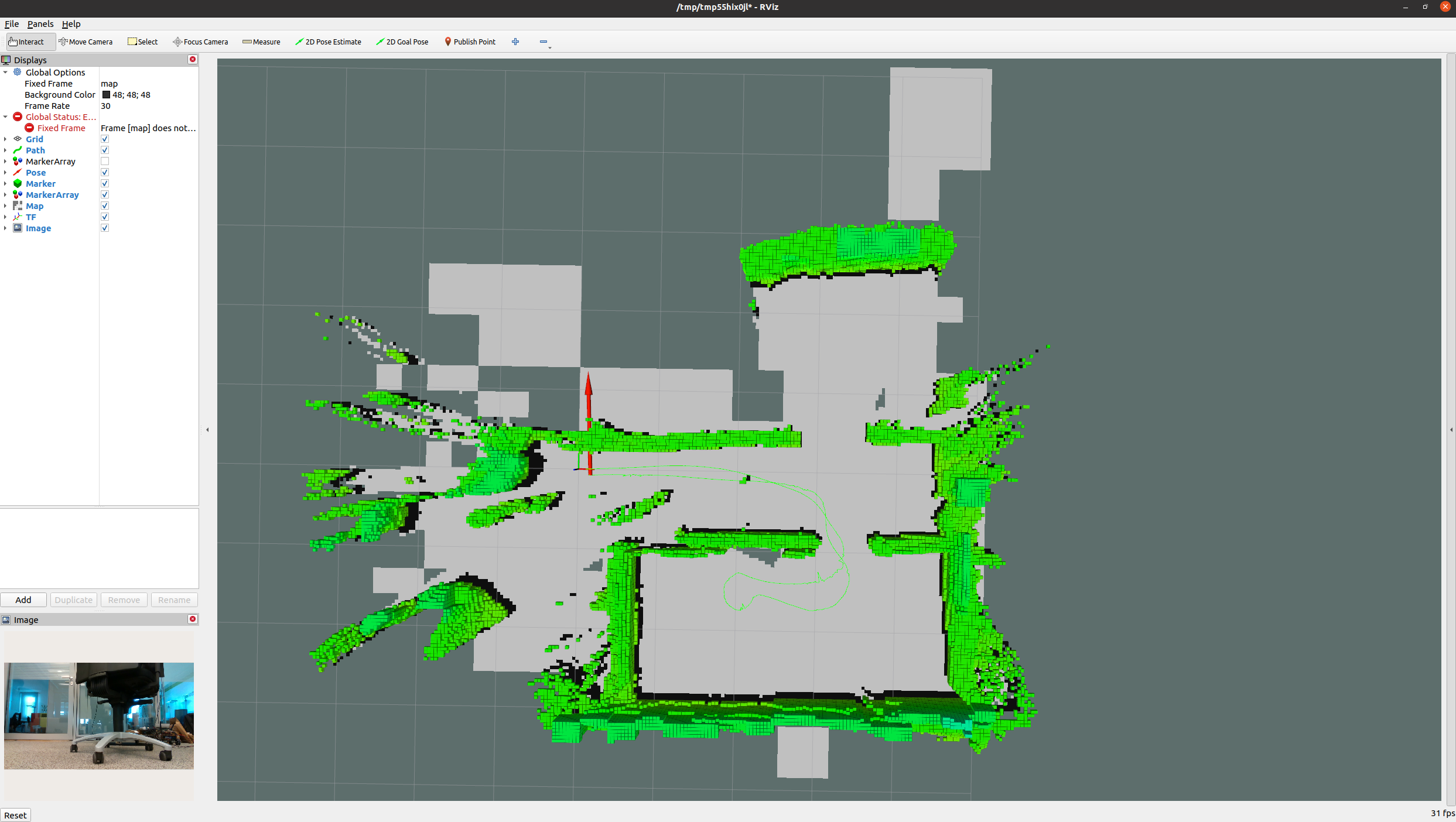

Expected result: On the opened rviz2, you see the visual SLAM keypoints, the 3D map, and the 2D map.

You can disable the /univloc_tracker_0/local_map topic, the univloc_tracker_0/fused_map topic, or both.

Visible Test: Showing keypoints, the 3D map, and the 2D map

Expected Result:

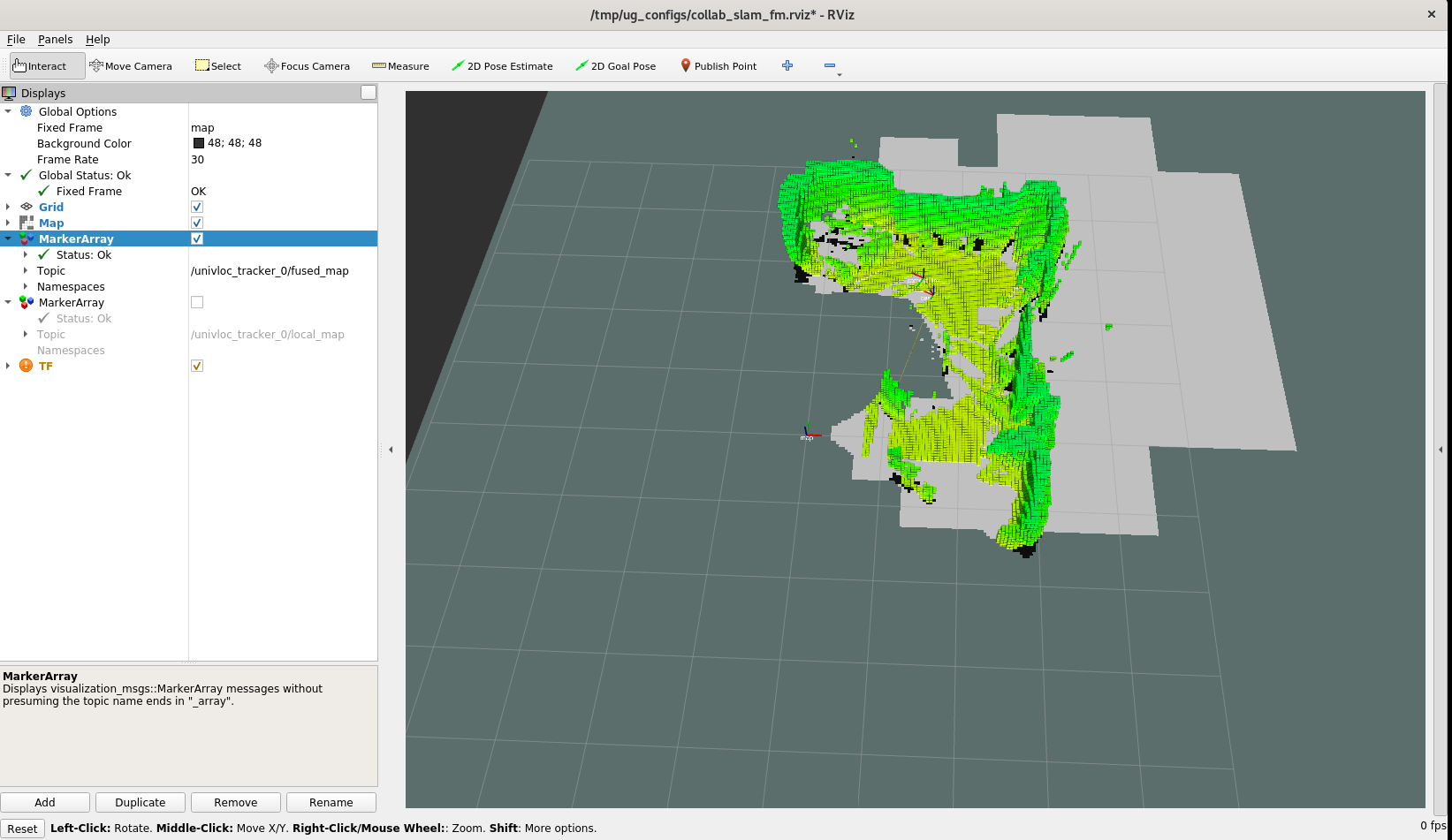

Visible Test: Showing the 3D map

Expected Result:

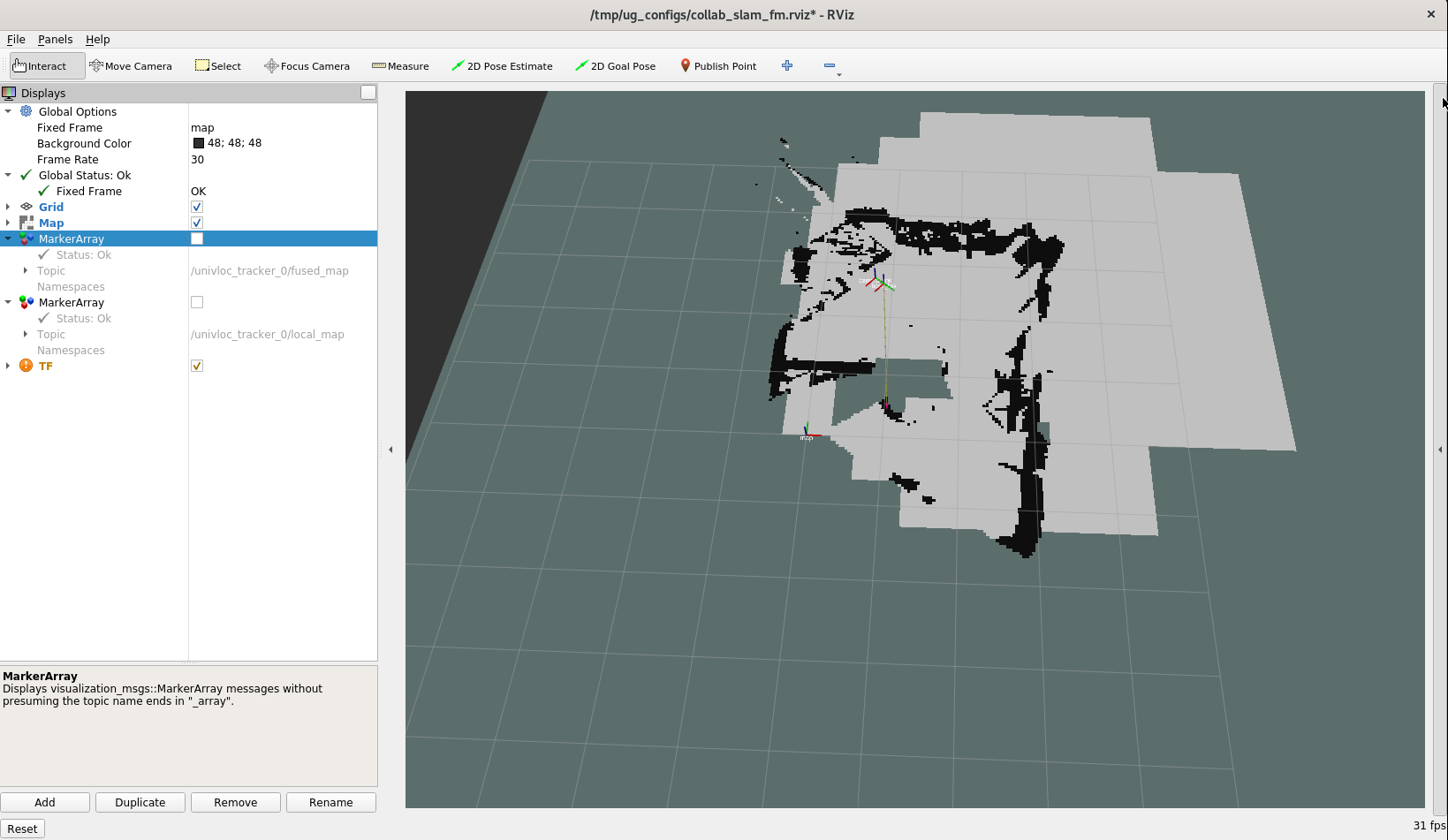

Visible Test: Showing the 2D map

Expected Result:

Visible Test: Showing keypoints and the 2D map

Expected Result:

You may stop execution of the script any time by pressing CTRL-C. This tutorial demo is complete when the output to the console indicates that no further images are being processed. (hint: look for the output, “got 0 images in past 3.0s”). Press CTRL-C when you see this to stop the executing script.

Collaborative Visual SLAM with Multi-Camera Feature¶

Note: This tutorial illustrates one part of the multi-camera feature in Collaborative SLAM: it uses a Kalman filter to fuse SLAM poses from different trackers in a loosely coupled manner, treating each camera as a separate tracker (ROS 2 node). The other parts of the multi-camera feature are not yet ready and will be integrated later.

To download and install the tutorial run the command below:

Note: In this installation package, there is a substantial ROS 2 bag file, which is approximately 206 MB in size.

Run the collaborative visual SLAM algorithm with tracker frame-level pose fusion using a Kalman filter:

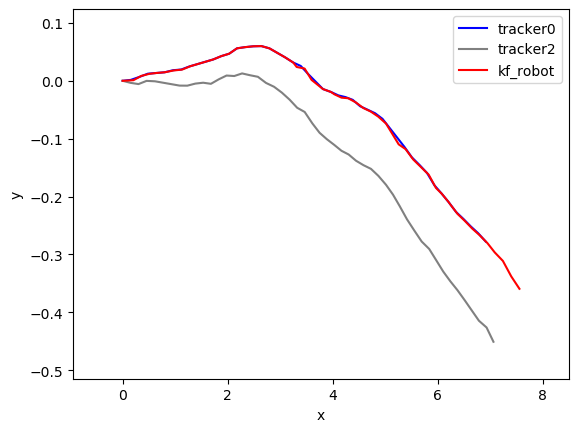

Expected result: On the opened rviz windows, you see the pose trajectory outputs for each camera.

You may stop execution of the script any time by pressing CTRL-C. This tutorial demo is complete when the output to the console indicates that no further images are being processed. (hint: look for the output, “got 0 images in past 3.0s”). Press CTRL-C when you see this to stop the executing script.

Afterwards, run the Python script to visualize the three trajectories obtained from the ROS 2 topics univloc_tracker_0/kf_pose, univloc_tracker_2/kf_pose, and /odometry/filtered.

Expected result: On the Python window, three trajectories are shown. An example image is as follows:

Blue indicates the trajectory generated by front camera.

Gray indicates the trajectory generated by rear camera.

Red indicates the fused trajectory generated by Kalman Filter.

The trajectory from the Kalman filter should be the fused result of the other two trajectories, which indicates that the multi-camera pose fusion is working properly.
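To make the idea of a fused trajectory concrete, here is a minimal Python sketch of loosely coupled fusion with a scalar Kalman filter. It is not the filter used by Collaborative Visual SLAM: the random-walk motion model, the variance values, and the function names `kalman_update` and `fuse_positions` are all assumptions chosen for illustration, and only one position axis is handled.

```python
def kalman_update(mean, var, measurement, meas_var):
    """One scalar Kalman measurement update; returns (new_mean, new_var)."""
    gain = var / (var + meas_var)
    new_mean = mean + gain * (measurement - mean)
    new_var = (1.0 - gain) * var
    return new_mean, new_var


def fuse_positions(front, rear, front_var=0.04, rear_var=0.04, process_var=0.01):
    """Fuse two 1D position tracks (for example, front and rear camera).

    Assumed model: the position follows a random walk with variance
    process_var per step, and each camera reports it with independent
    Gaussian noise. All variances are illustrative, not tuned values.
    """
    mean, var = front[0], front_var  # initialise from the first front sample
    fused = []
    for z_front, z_rear in zip(front, rear):
        var += process_var  # predict step of the random-walk model
        mean, var = kalman_update(mean, var, z_front, front_var)
        mean, var = kalman_update(mean, var, z_rear, rear_var)
        fused.append(mean)
    return fused


if __name__ == "__main__":
    front_track = [1.0] * 50  # hypothetical front-camera positions
    rear_track = [1.2] * 50   # hypothetical rear-camera positions
    print(fuse_positions(front_track, rear_track)[-1])
```

With equal measurement variances, the fused estimate settles midway between the two constant tracks; giving one camera a larger variance pulls the estimate toward the other. A real pose filter would track all pose dimensions and use a motion model suited to the robot.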

You may stop execution of the Python script any time by closing the chart window.

Collaborative Visual SLAM with 2D Lidar Enabled¶

To download and install the tutorial run the command below:

Note: In this tutorial installation, there is a substantial ROS 2 bag file, which is approximately 3.7 GB in size.

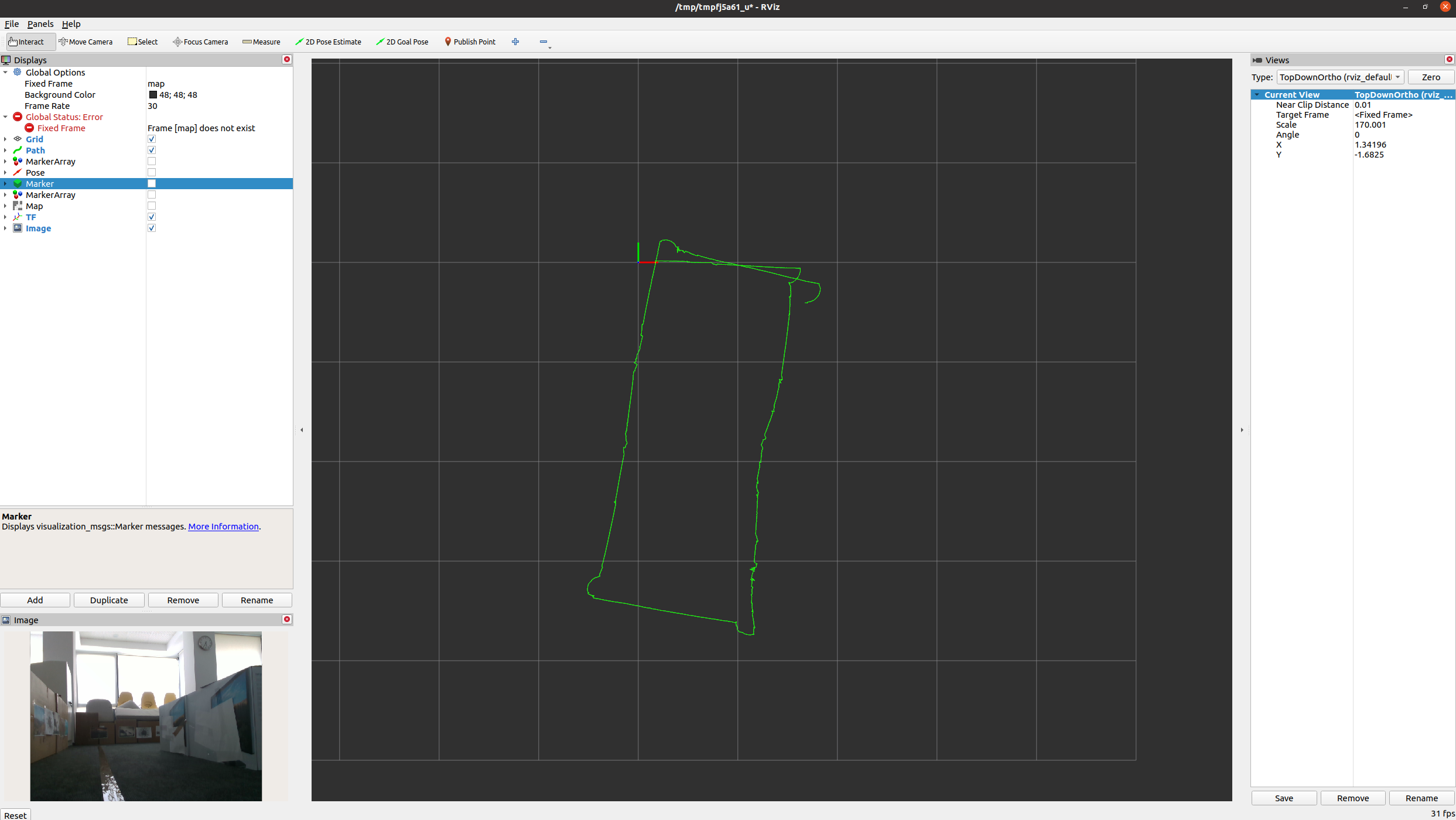

Run the collaborative visual SLAM algorithm with auxiliary Lidar data input:

Use a separate terminal to debug and capture the output ROS 2 topic. You can check whether a given topic has been published and view its messages.

Expected result: the values of pose_failure_count and feature_failure_count should not remain at 0, which is their default value; both should increase over time. On the opened rviz, you see the pose trajectory when Lidar data is used.
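The check on the two counters can be written down as a small Python sketch. It assumes you have already collected a few readings of the two fields in time order; how the readings are collected is outside this sketch, and the function names `counter_increased` and `lidar_counters_ok` are hypothetical.

```python
def counter_increased(values):
    """Return True if a counter has left its starting value and ended above 0.

    values: readings of one counter in time order (at least two are needed).
    """
    if len(values) < 2:
        return False
    return values[-1] > 0 and values[-1] > values[0]


def lidar_counters_ok(samples):
    """samples: list of (pose_failure_count, feature_failure_count) tuples."""
    pose_counts = [pose for pose, _ in samples]
    feature_counts = [feature for _, feature in samples]
    return counter_increased(pose_counts) and counter_increased(feature_counts)


if __name__ == "__main__":
    readings = [(0, 0), (2, 1), (5, 3)]  # hypothetical readings
    print(lidar_counters_ok(readings))
```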

You may stop execution of the script any time by pressing CTRL-C. This tutorial demo is complete when the output to the console indicates that no further images are being processed. (hint: look for the output, “got 0 images in past 3.0s”). Press CTRL-C when you see this to stop the executing script.

Collaborative Visual SLAM with Region-wise Remapping Feature¶

To download and install the tutorial run the command below:

Note: In this tutorial installation, there is a substantial ROS 2 bag file, which is approximately 2.6 GB in size.

Run the collaborative visual SLAM algorithm in mapping mode to construct the keyframe/landmark map and 3D octree map:

Expected result: On the opened server rviz, you see the keyframe and landmark constructed in mapping mode.

On the opened tracker rviz, you see the 3D octree map constructed in mapping mode.

You may stop execution of the script any time by pressing CTRL-C. This tutorial demo is complete when the output to the console indicates that no further images are being processed. (hint: look for the output, “got 0 images in past 3.0s”). Press CTRL-C when you see this to stop the executing script.

Run the collaborative visual SLAM algorithm in remapping mode to load and update pre-constructed keyframe/landmark and 3D octree map:

Expected result: On the opened server rviz, you see the loaded keyframe/landmark map that was pre-constructed in mapping mode. Within the remapping region, the corresponding part of the map is deleted.

On the opened tracker rviz, initially you see the loaded 3D octree map.

On the opened tracker rviz, after the bag has finished playing, you see that the 3D octree map inside the remapping region has been updated.

You may stop execution of the script any time by pressing CTRL-C. This tutorial demo is complete when the output to the console indicates that no further images are being processed. (hint: look for the output, “got 0 images in past 3.0s”). Press CTRL-C when you see this to stop the executing script.

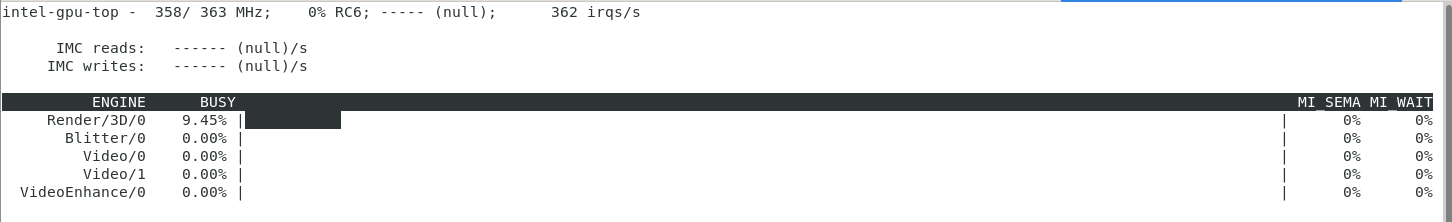

Collaborative Visual SLAM with GPU Offloading¶

With the Intel GPU Level-Zero accelerated package for Collaborative SLAM installed, it is possible to check GPU usage while a tutorial is actively executing.

In a terminal, check how much of the GPU is being used with intel_gpu_top.

Troubleshooting¶

IMU functionality does not currently work properly for the AVX2 and GPU Level-Zero accelerated packages. Please use the SSE-only version of Collaborative SLAM for IMU.

The odometry feature use_odom:=true does not work with these bags. The ROS 2 bags used in this example do not have the necessary topics recorded for the odometry feature of collaborative visual SLAM.

If the use_odom:=true parameter is set, collab-slam reports errors.

For general robot issues, go to: Troubleshooting for Robotics SDK Tutorials.