Follow-me Algorithm with Gesture-based Control

Follow-me Algorithm is a Robotics SDK application for following a target person, in which the movement of the robot can be controlled by the target's hand gestures. It takes point cloud data from a 2D Lidar or depth camera, together with RGB camera images, as inputs. The point cloud is processed by the Intel®-patented Adaptive DBScan (ADBScan) algorithm and the RGB images by a deep-learning-based gesture recognition pipeline, and the results are used to publish motion command messages for a differential drive robot. This multi-model application combines unsupervised object clustering with a deep-learning gesture recognition model, yet it remains computationally lightweight and therefore edge-friendly. With 2D Lidar data, the ADBScan algorithm can run at 120 fps on an Intel® Tiger Lake processor.
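ADBScan computes its clustering parameters adaptively, and that Intel®-patented formulation is not described in this tutorial. To illustrate the underlying idea only, here is a minimal sketch of standard, fixed-parameter DBSCAN on 2D points: nearby returns are grouped into clusters and isolated returns are labeled as noise. This is not the ADBScan implementation. The function names, the `eps` and `min_pts` values, and the use of cluster centroids as candidate target locations are illustrative assumptions.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]
NOISE = -1
UNVISITED = -2


def region_query(points: List[Point], i: int, eps: float) -> List[int]:
    """Indices of all points within distance eps of points[i], including i."""
    px, py = points[i]
    return [j for j, (qx, qy) in enumerate(points)
            if math.hypot(px - qx, py - qy) <= eps]


def dbscan(points: List[Point], eps: float, min_pts: int) -> List[int]:
    """Standard DBSCAN: one cluster label per point, NOISE (-1) for outliers."""
    labels = [UNVISITED] * len(points)
    cluster_id = 0
    for i in range(len(points)):
        if labels[i] != UNVISITED:
            continue
        neighbors = region_query(points, i, eps)
        if len(neighbors) < min_pts:
            labels[i] = NOISE  # may later be claimed as a border point
            continue
        labels[i] = cluster_id  # i is a core point: start a new cluster
        seeds = [j for j in neighbors if j != i]
        while seeds:
            j = seeds.pop()
            if labels[j] == NOISE:
                labels[j] = cluster_id  # border point: joins but does not expand
                continue
            if labels[j] != UNVISITED:
                continue  # already assigned
            labels[j] = cluster_id
            j_neighbors = region_query(points, j, eps)
            if len(j_neighbors) >= min_pts:
                seeds.extend(j_neighbors)  # j is also a core point: keep expanding
        cluster_id += 1
    return labels


def cluster_centroids(points: List[Point], labels: List[int]) -> Dict[int, Point]:
    """Mean (x, y) of each cluster; noise points are ignored."""
    sums: Dict[int, Tuple[float, float, int]] = {}
    for (x, y), lab in zip(points, labels):
        if lab == NOISE:
            continue
        sx, sy, n = sums.get(lab, (0.0, 0.0, 0))
        sums[lab] = (sx + x, sy + y, n + 1)
    return {lab: (sx / n, sy / n) for lab, (sx, sy, n) in sums.items()}


# Two tight groups of returns plus one isolated reading (made-up values).
scan = [(1.0, 0.0), (1.05, 0.02), (1.02, -0.03),
        (3.0, 1.0), (3.02, 1.01), (3.01, 0.98),
        (5.0, -2.0)]
labels = dbscan(scan, eps=0.1, min_pts=2)
print(labels)  # [0, 0, 0, 1, 1, 1, -1]
print(cluster_centroids(scan, labels))
```

The neighbor search here is O(n²) in the number of points; practical implementations usually rely on a spatial index such as a k-d tree.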

This tutorial describes how to launch a demo of this application in the Gazebo simulator.

Getting Started

Install Deb package

Install the ros-humble-followme-turtlebot3-gazebo Deb package from the Intel® Robotics SDK APT repository.

Install Python Modules

This application uses the MediaPipe Hands framework for hand gesture recognition. Please install the following modules as prerequisites for the framework:

Run Demo with 2D Lidar

Run the following script to launch the Gazebo simulator and rviz.

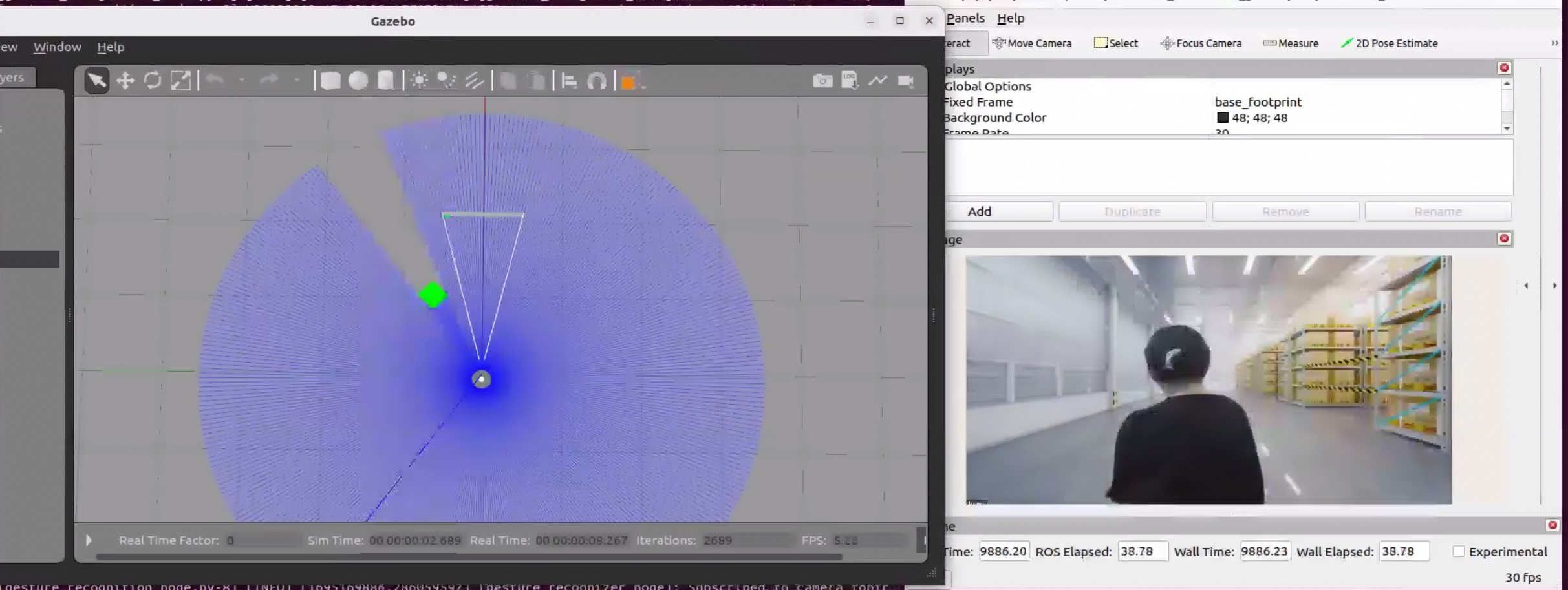

You will see two panels side by side: the Gazebo GUI on the left and the ROS 2 rviz display on the right.

The green square robot is the guide robot (the target), which follows a predefined trajectory.

The gray circular robot is a turtlebot3, which follows the guide robot. The turtlebot3 is equipped with a 2D Lidar and an Intel® RealSense™ depth camera. In this demo, the 2D Lidar topic is used as the input.
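A 2D Lidar in ROS 2 commonly publishes sensor_msgs/msg/LaserScan messages, whose `ranges` are polar readings taken at angles `angle_min + i * angle_increment`. If the Lidar input arrives in that form, it has to be converted into Cartesian points before it can be clustered. The following is a minimal sketch of that conversion in plain Python; the function name `scan_to_points` is hypothetical, and it takes the LaserScan field values as arguments instead of a real message object.

```python
import math
from typing import List, Sequence, Tuple


def scan_to_points(ranges: Sequence[float], angle_min: float,
                   angle_increment: float, range_min: float,
                   range_max: float) -> List[Tuple[float, float]]:
    """Convert polar 2D Lidar readings to (x, y) points in the sensor frame.

    x points forward and y to the left (ROS REP 103 convention). Non-finite
    readings and readings outside [range_min, range_max] are dropped.
    """
    points = []
    for i, r in enumerate(ranges):
        if not math.isfinite(r) or r < range_min or r > range_max:
            continue
        theta = angle_min + i * angle_increment  # beam angle of reading i
        points.append((r * math.cos(theta), r * math.sin(theta)))
    return points


# Three beams at 0, 90 and 180 degrees; the middle one saw nothing (inf).
pts = scan_to_points([1.0, float("inf"), 2.0], angle_min=0.0,
                     angle_increment=math.pi / 2, range_min=0.1,
                     range_max=10.0)
print(len(pts))  # 2
```

The x-forward, y-left convention matches the one used for the x_filter_back and y_filter_* parameters in the configuration table.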

The start condition for activating the follow-me algorithm is met when both of the following are true:

- The target (guide robot) is within the tracking radius (0.5 to 0.7 meters, a reconfigurable parameter) of the turtlebot, and
- The gesture of the target (visualized in the /image topic in rviz) is thumbs up.

The stop condition for the turtlebot3 is met when either of the following is true:

- The target (guide robot) moves farther than the tracking radius (0.5 to 0.7 meters, a reconfigurable parameter) from the turtlebot, or
- The gesture of the target (visualized in the /image topic in rviz) is thumbs down.
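Taken together, the start and stop conditions behave like a latch: a thumbs up inside the tracking radius switches following on, and it stays on until the target leaves the radius or shows a thumbs down. The following is a minimal sketch of that logic, assuming the distance to the target and the recognized gesture are already available for each frame. The `Gesture` enum, the `should_follow` function, and the 0.7 m default radius are hypothetical, and the sketch treats a missing detection as out of range (it ignores the occlusion tolerance that the real application supports).

```python
from enum import Enum
from typing import Optional


class Gesture(Enum):
    THUMBS_UP = "thumbs_up"
    THUMBS_DOWN = "thumbs_down"
    NONE = "none"  # no hand visible or an unrecognized gesture


def should_follow(following: bool, target_dist: Optional[float],
                  gesture: Gesture, tracking_radius: float = 0.7) -> bool:
    """Return whether the robot should be following after this frame.

    target_dist is the distance to the target in meters, or None if the
    target was not detected in this frame (treated here as out of range).
    """
    in_range = target_dist is not None and target_dist <= tracking_radius
    if not following:
        # Start condition: target within the tracking radius AND thumbs up.
        return in_range and gesture is Gesture.THUMBS_UP
    # Stop condition: target beyond the tracking radius OR thumbs down.
    return in_range and gesture is not Gesture.THUMBS_DOWN


following = False
history = []
for dist, gesture in [(0.6, Gesture.NONE), (0.6, Gesture.THUMBS_UP),
                      (0.65, Gesture.NONE), (0.6, Gesture.THUMBS_DOWN)]:
    following = should_follow(following, dist, gesture)
    history.append(following)
print(history)  # [False, True, True, False]
```

Latching the state means the target does not have to hold the thumbs-up gesture for the whole time it is being followed.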

Run Demo with Intel® RealSense™ Camera

Run the following commands in three separate terminals, one at a time:

Terminal 1: This command opens the Gazebo simulator and rviz.

Terminal 2: This command runs the adbscan package, which applies the Intel®-patented unsupervised clustering algorithm ADBScan to the point cloud data to detect the location of the target.

Terminal 3: This command runs the gesture recognition package.

In this demo, the turtlebot's Intel® RealSense™ camera is selected as the input. After running all of the above commands, you will observe the same turtlebot and guide robot behavior in the Gazebo GUI as in Run Demo with 2D Lidar.

Note

The /opt/ros/humble/share/followme_turtlebot3_gazebo/config/ directory contains reconfigurable parameter files for both the Lidar (adbscan_sub_2D.yaml) and the RealSense camera (adbscan_sub_RS.yaml) inputs. If required, you can modify these parameters before running the tutorial to suit your robot, sensor configuration, and environment. Find a brief description of the parameters in the following table.

| Parameter | Description |
|---|---|
| Lidar_type | Type of the point cloud sensor. The default value is RS for the RealSense camera input and 2D for the Lidar input. |
| Lidar_topic | Name of the topic publishing the point cloud data. |
| Verbose | If this flag is set to True, the detected target object locations are printed to the screen log output. |
| subsample_ratio | Downsampling rate of the original point cloud data. Default value = 15 (i.e., every 15th point in the original point cloud is sampled and passed to the core ADBScan algorithm). |
| x_filter_back | Point cloud data with x-coordinate > x_filter_back are filtered out (the positive x-direction lies in front of the robot). |
| y_filter_left, y_filter_right | Point cloud data with y-coordinate > y_filter_left or y-coordinate < y_filter_right are filtered out (the positive y-direction is to the left of the robot, the negative y-direction to the right). |
| Z_based_ground_removal | Point cloud data with z-coordinate > Z_based_ground_removal are filtered out. |
| base, coeff_1, coeff_2, scale_factor | Coefficients used to calculate the adaptive parameters of the ADBScan algorithm. These values are pre-computed, and it is recommended to keep them unchanged. |
| init_tgt_loc | Initial target location. The person needs to be at a distance of init_tgt_loc in front of the robot to start the robot's motors. |
| max_dist | Maximum distance at which the robot can follow. If the person moves farther than max_dist, the robot stops following. |
| min_dist | Safe distance the robot always maintains from the target. If the person moves closer than min_dist, the robot stops following. |
| max_linear | Maximum linear velocity of the robot. |
| max_angular | Maximum angular velocity of the robot. |
| max_frame_blocked | Number of frames for which the robot keeps following the target during a temporary occlusion. |
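To show how the filtering and following parameters in the table could fit together, here is a minimal sketch. It applies subsampling and the x/y/z filters as the table describes them, then computes a velocity command with a simple proportional controller: the command is clamped to max_linear and max_angular, the robot stops outside [min_dist, max_dist], and up to max_frame_blocked occluded frames are tolerated by reusing the last known target location. The class and function names, the example values, the gains `k_lin` and `k_ang`, and the control law itself are assumptions for illustration and are not taken from the followme_turtlebot3_gazebo package; init_tgt_loc and the ADBScan coefficients are not modeled.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple

Point3 = Tuple[float, float, float]


@dataclass
class FollowMeParams:
    # Hypothetical example values, not the shipped defaults in
    # adbscan_sub_2D.yaml / adbscan_sub_RS.yaml.
    subsample_ratio: int = 15
    x_filter_back: float = 5.0
    y_filter_left: float = 1.0
    y_filter_right: float = -1.0
    Z_based_ground_removal: float = 2.0
    max_dist: float = 2.0
    min_dist: float = 0.5
    max_linear: float = 0.5
    max_angular: float = 1.0
    max_frame_blocked: int = 5


def preprocess(points: List[Point3], p: FollowMeParams) -> List[Point3]:
    """Subsample, then drop points outside the x/y/z limits from the table."""
    sampled = points[::p.subsample_ratio]  # every subsample_ratio-th point
    return [(x, y, z) for (x, y, z) in sampled
            if x <= p.x_filter_back
            and p.y_filter_right <= y <= p.y_filter_left
            and z <= p.Z_based_ground_removal]


def clamp(value: float, limit: float) -> float:
    """Limit value to the range [-limit, limit]."""
    return max(-limit, min(limit, value))


class Follower:
    """Hypothetical proportional follow controller using the table parameters."""

    def __init__(self, p: FollowMeParams, k_lin: float = 1.0, k_ang: float = 2.0):
        self.p = p
        self.k_lin = k_lin  # assumed gains, not taken from the package
        self.k_ang = k_ang
        self.last_target: Optional[Tuple[float, float]] = None
        self.blocked_frames = 0

    def step(self, target: Optional[Tuple[float, float]]) -> Tuple[float, float]:
        """Return (linear, angular) velocity for one frame.

        target is the (x, y) target location in the robot frame, or None if
        the target is occluded in this frame.
        """
        if target is None:
            self.blocked_frames += 1
            if self.last_target is None or self.blocked_frames > self.p.max_frame_blocked:
                return (0.0, 0.0)  # occluded for too long: stop
            target = self.last_target  # brief occlusion: reuse last location
        else:
            self.blocked_frames = 0
            self.last_target = target
        x, y = target
        dist = math.hypot(x, y)
        if dist > self.p.max_dist or dist < self.p.min_dist:
            return (0.0, 0.0)  # outside [min_dist, max_dist]: stop following
        linear = clamp(self.k_lin * (dist - self.p.min_dist), self.p.max_linear)
        angular = clamp(self.k_ang * math.atan2(y, x), self.p.max_angular)
        return (linear, angular)


params = FollowMeParams()
follower = Follower(params)
print(follower.step((1.0, 0.0)))  # (0.5, 0.0)
print(follower.step(None))        # (0.5, 0.0), still within max_frame_blocked
```

A proportional law is used here only because it is the simplest controller that respects the velocity limits; the actual package may use a different one.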

Troubleshooting

Failed to install Deb package: Please make sure to run sudo apt update before installing the necessary Deb packages.

You can stop the demo at any time by pressing Ctrl-C. If the Gazebo simulator freezes or does not stop, please use the following command in a terminal: