Follow-me Introduction

Follow-me is a Robotics SDK application that follows a target person using point cloud input from a 2D Lidar or a depth camera. The input is passed to the Intel®-patented Adaptive DBScan (ADBScan) algorithm, which detects the location of the target; the application then publishes motion command messages for a differential drive robot. The application is computationally lightweight: the core clustering method, ADBScan, can run at 120 fps on an Intel® Tiger Lake processor with 2D Lidar data. This tutorial describes how to launch a demo of the application with input point clouds published from recorded ROS 2 bag files.
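To make the clustering step more concrete, the following is a minimal sketch of plain, fixed-parameter DBSCAN on 2D points, followed by a centroid computation. It is not Intel's ADBScan, whose adaptive behavior is not described in this tutorial; the function names and the `eps` and `min_pts` values are illustrative assumptions.

```python
import math
from collections import deque


def region_query(points, idx, eps):
    """Return indices of all points within eps of points[idx] (idx included)."""
    px, py = points[idx]
    return [j for j, (qx, qy) in enumerate(points)
            if math.hypot(px - qx, py - qy) <= eps]


def dbscan(points, eps=0.3, min_pts=3):
    """Plain DBSCAN (not ADBScan): one label per point, -1 means noise."""
    unvisited, noise = None, -1
    labels = [unvisited] * len(points)
    cluster_id = 0
    for i in range(len(points)):
        if labels[i] is not unvisited:
            continue
        neighbors = region_query(points, i, eps)
        if len(neighbors) < min_pts:
            labels[i] = noise  # may later become a border point
            continue
        labels[i] = cluster_id
        queue = deque(neighbors)
        while queue:
            j = queue.popleft()
            if labels[j] == noise:
                labels[j] = cluster_id  # border point joins the cluster
            if labels[j] is not unvisited:
                continue
            labels[j] = cluster_id
            j_neighbors = region_query(points, j, eps)
            if len(j_neighbors) >= min_pts:  # core point: keep expanding
                queue.extend(j_neighbors)
        cluster_id += 1
    return labels


def cluster_centroids(points, labels):
    """Average the points of each cluster, ignoring noise."""
    sums = {}
    for (x, y), label in zip(points, labels):
        if label == -1:
            continue
        sx, sy, n = sums.get(label, (0.0, 0.0, 0))
        sums[label] = (sx + x, sy + y, n + 1)
    return {label: (sx / n, sy / n) for label, (sx, sy, n) in sums.items()}


# Hypothetical scan in the robot frame (metres): two objects and one outlier.
points = [(0.5, 0.0), (0.55, 0.05), (0.45, -0.05),
          (3.0, 2.0), (3.1, 2.0), (3.0, 2.1),
          (10.0, 10.0)]
labels = dbscan(points)
centroids = cluster_centroids(points, labels)
```

With this made-up input, the first three points form one cluster, the next three form a second, and the isolated point is labeled as noise.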

Getting Started

Install Deb package

Install the ros-humble-adbscan-ros2-follow-me Deb package from the Intel® Robotics SDK APT repository, for example with `sudo apt install ros-humble-adbscan-ros2-follow-me`.

Install the following package, which contains the ROS 2 bag files used to publish point cloud data from a 2D Lidar or an Intel® RealSense™ camera:

Run Demo with 2D Lidar

Run the following script to launch the demo with 2D Lidar input:

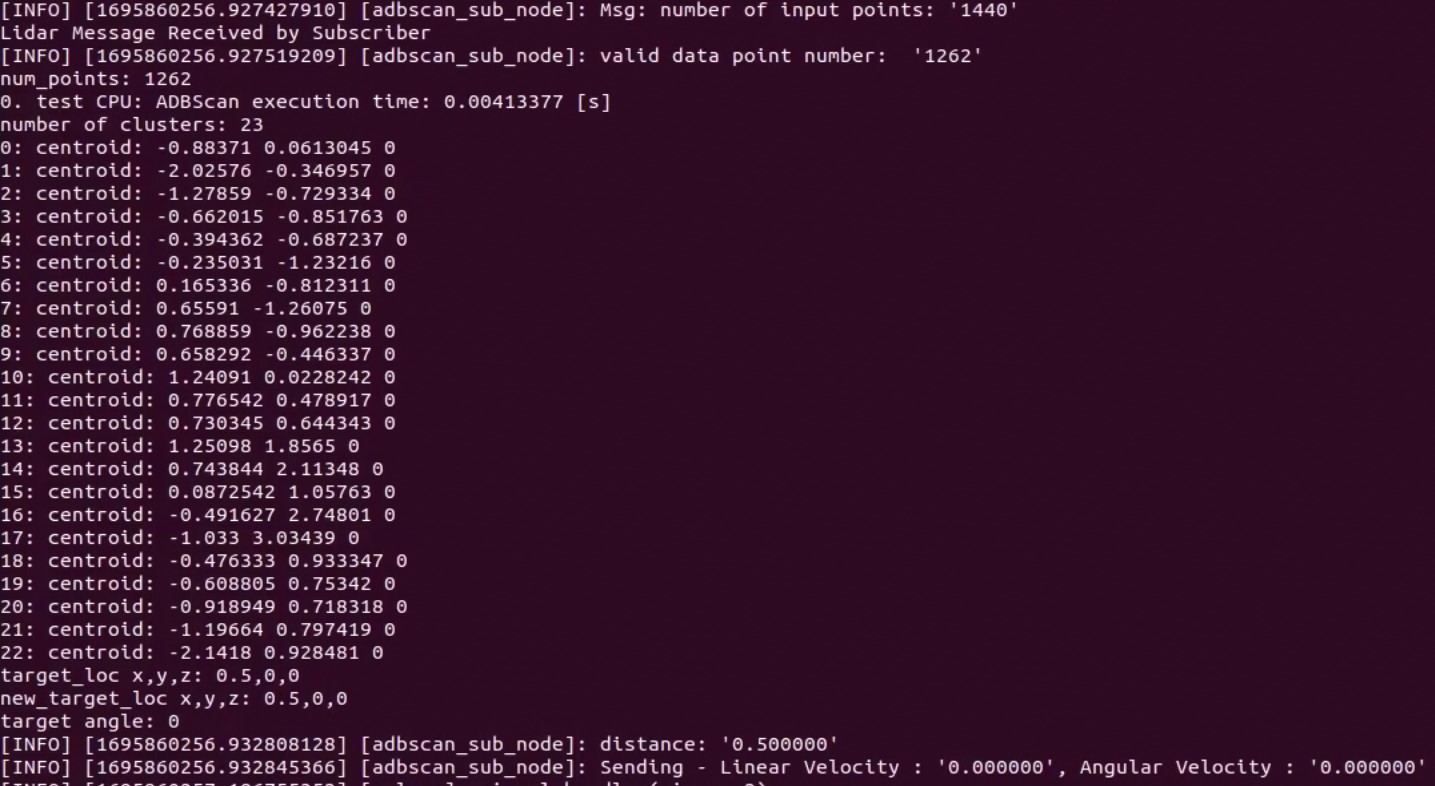

You will see the following information on the screen:

The output shows the number of valid data points in the ROS 2 bag file, the centroids of the detected clusters, and the linear and angular velocity published for the robot.
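As an illustration of how a target centroid could be turned into the linear and angular velocity values shown above, here is a minimal sketch of a clamped proportional controller. The tutorial does not document the control law that follow-me actually uses, so the function name, the gains, the `follow_dist` setpoint, and the velocity limits are all hypothetical.

```python
import math


def clamp(value, limit):
    """Restrict value to the range [-limit, limit]."""
    return max(-limit, min(limit, value))


def follow_command(cx, cy, follow_dist=0.5, k_lin=0.8, k_ang=1.5,
                   max_lin=0.5, max_ang=1.0):
    """Map a target centroid to a (linear, angular) velocity pair.

    Assumes the robot frame has x pointing forward and y pointing left,
    both in metres. All default parameters are illustrative assumptions.
    """
    distance = math.hypot(cx, cy)
    bearing = math.atan2(cy, cx)  # positive when the target is to the left
    linear = k_lin * (distance - follow_dist)  # close the distance gap
    angular = k_ang * bearing                  # turn toward the target
    return clamp(linear, max_lin), clamp(angular, max_ang)
```

In this sketch the robot stops when the target sits exactly `follow_dist` ahead, and both outputs saturate for a target that is far away or far off-axis.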

Run Demo with Intel® RealSense™ Camera

Run the following script to launch the demo with Intel® RealSense™ camera input:

The output is similar to the one described in Run Demo with 2D Lidar.

You can launch a robot driver package that subscribes to the twist messages published by the follow-me application and then observe the robot in action as it follows a target person.
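For context on what such a driver does with a twist message, the sketch below converts a linear and angular velocity into left and right wheel speeds using standard differential drive kinematics. In ROS 2 the two inputs would typically come from the `linear.x` and `angular.z` fields of a `geometry_msgs/msg/Twist` message; the function name, wheel separation, and wheel radius here are hypothetical and do not describe any particular robot.

```python
def twist_to_wheel_speeds(linear, angular,
                          wheel_separation=0.3, wheel_radius=0.05):
    """Convert a body twist to (left, right) wheel angular speeds in rad/s.

    linear is in m/s, angular in rad/s (positive = counter-clockwise).
    wheel_separation and wheel_radius are in metres and are assumed values.
    """
    # Each wheel's ground speed is the body speed plus or minus the
    # contribution of the rotation about the robot's centre.
    v_left = linear - angular * wheel_separation / 2.0
    v_right = linear + angular * wheel_separation / 2.0
    return v_left / wheel_radius, v_right / wheel_radius
```

A pure forward command yields equal wheel speeds, while a pure rotation yields equal and opposite speeds.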

We validated the application on a Pengo robot, with the follow-me algorithm running on an Intel® Tiger Lake processor. The demo videos are listed below:

- Demo 1 with Lidar
- Demo 2 with Lidar
- Demo 1 with RealSense camera (also shows temporary occlusion handling)
- Demo 2 with RealSense camera, in which the robot follows a moving box

Note

In each scenario, the target must be at a distance of 0.5 m in front of the robot to initiate tracking.
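One way to picture this start condition is as a search for a cluster centroid close to the point 0.5 m straight ahead of the robot. The sketch below is only an assumption about how such a check might look; the tutorial does not say how follow-me implements it, and the function name and `tolerance` value are hypothetical.

```python
import math


def select_initial_target(centroids, start_dist=0.5, tolerance=0.2):
    """Return the centroid nearest to the point start_dist metres ahead.

    centroids is an iterable of (x, y) pairs in the robot frame
    (x forward, y left, metres). Returns None if no centroid lies
    within tolerance of the start point. tolerance is an assumed value.
    """
    best, best_err = None, tolerance
    for cx, cy in centroids:
        if cx <= 0.0:
            continue  # ignore anything beside or behind the robot
        err = math.hypot(cx - start_dist, cy)
        if err <= best_err:
            best, best_err = (cx, cy), err
    return best
```

Returning `None` when nothing is near the start point lets a caller keep the robot stationary until a person steps into position.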

Troubleshooting

- Failed to install Deb package: make sure to run `sudo apt update` before installing the necessary Deb packages.
- You can stop the demo at any time by pressing Ctrl-C.