How it Works¶

The Intel® Robotics SDK (Robotics SDK) modules are deployed as Deb packages for enhanced Developer Experience (DX), support of Continuous Integration and Continuous Deployment (CI/CD) practices and flexible deployment in different execution environments, including robot, development PC, server, and cloud.

Modules and Services¶

The middleware layered architecture in the Intel® oneAPI Base Toolkit (oneAPI™) and Intel® Distribution of OpenVINO™ toolkit (OpenVINO™) abstracts hardware dependencies from the algorithm implementation.

The ROS 2 with data distribution service (DDS) is used as a message bus. This Publisher-Subscriber architecture based on ROS 2 topics decouples data providers from consumers.

Camera and LIDAR sensor data is abstracted with ROS 2 topics.

Video streaming processing pipelines are supported by GStreamer*. GStreamer* is a library for constructing graphs of media-handling components. It decouples sensor ingestion, video processing and AI object detection via OpenVINO™ toolkit DL Streamer framework. The applications it supports range from simple Ogg Vorbis playback audio and video streaming to complex audio (mixing) and video (non-linear editing) processing.

More complex computational graphs that decouple sense-plan-act AMR applications can be implemented using ROS 2 topic registration.

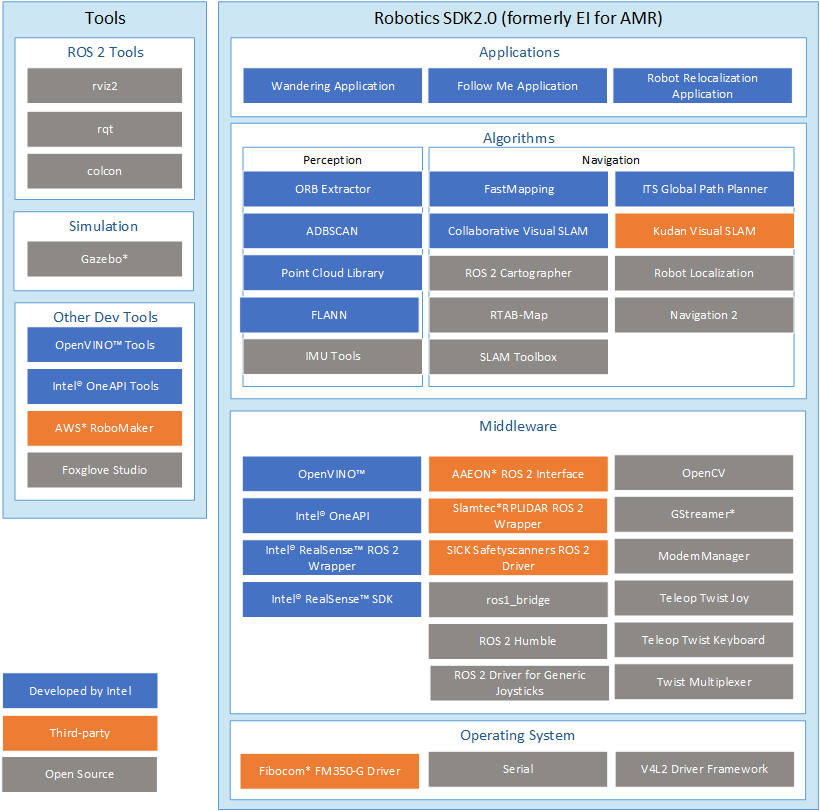

This diagram shows the software components included in the Robotics SDK package.

The Robotics SDK software stack is based on software supported by and part of the underlying hardware platform, their respective Unified Extensible Firmware Interface (UEFI) based boot, and their supported Linux* operating system. For requirement details, see Recommended Hardware.

Robotics SDK Drivers¶

Intel® Robotics SDK relies on standard Intel® Architecture Linux* drivers included and upstreamed in the Linux* kernel from kernel.org and included in Ubuntu* distributions. These drivers are not included in the Robotics SDK package. Some notable drivers that are specifically important for Robotics SDK include:

Video4Linux2 Driver Framework, a collection of device drivers and an API for supporting realtime video capture on Linux* systems (It supports many USB webcams, TV tuners, and related devices, standardizing their output, so that programmers can easily add video support to their applications.)

Serial, the serial stream as used in Ethernet and USB interfaces

Tools¶

ROS 2 Tools¶

Intel® Robotics SDK is validated using ROS 2 nodes. ROS 1 is not compatible with Robotics SDK components. A ROS 1 bridge is included to allow Robotics SDK components to interface with ROS 1 components.

From the hardware perspective of the supported platforms, there are no known limitations for ROS 1 components.

For information on porting ROS 1 applications to ROS 2, here is a guide from the ROS community.

Intel® Robotics SDK includes:

rqt, a software framework of ROS 2 that implements the various GUI tools in the form of plugins

rviz2, a tool used to visualize ROS 2 topics

colcon (collective construction), a command line tool to improve the workflow of building, testing, and using multiple software packages (It automates the process, handles the ordering, and sets up the environment to use the packages.)

Simulation Tools¶

The Gazebo* robot simulator, making it possible to rapidly test algorithms, design robots, perform regression testing, and train AI systems using realistic scenarios. Gazebo offers the ability to simulate populations of robots accurately and efficiently in complex indoor and outdoor environments

An industrial simulation room model for Gazebo*, the Open Source Robotics Foundation (OSRF) Gazebo Environment for Agile Robotics (GEAR) workcell that was used for the ARIAC competition in 2018

Other Tools¶

Intel® oneAPI Base Toolkit, which includes the DPC++ compiler and compatibility tool, as well as debugging and profiling tools like the VTune™ Profiler (formerly known as Intel System Studio)

OpenVINO™ Tools, including the model optimization tool

AWS* RoboMaker, a cloud-based simulation service that enables robotics developers to run, scale, and automate simulation without managing any infrastructure

Foxglove Studio, interactive visualizations to analyze live connections and pre-recorded data

Robotics SDK Applications¶

Wandering Application, included in the Robotics SDK SDK to demonstrate the combination of the middleware, algorithms, and the ROS 2 navigation stack to move a robot around a room while avoiding hitting obstacles, update a local map in real time exposed as a ROS topic, and publish AI-based objects detected in another ROS topic (It uses the robot’s sensors and actuators that are available from the robot’s hardware configuration.)

Object Detection AI Application, detects objects in video data using a deep learning neural network model from the OpenVINO™ Model Zoo

Robotics SDK Algorithms¶

Robotics SDK includes reference algorithms as well as deep learning models as working examples of automated robot control functions.

Open Model Zoo for OpenVINO™¶

The Open Model Zoo for OpenVINO™ toolkit delivers optimized deep learning models and a set of demos to expedite development of high-performance deep learning inference applications. You can use these pre-trained models instead of training your own models to speed up the development and production deployment process.

For details, see Model Zoo.

ADBSCAN¶

Tutorial: ADBSCAN Algorithm

DBSCAN (Density-Based Spatial Clustering of Applications with Noise) is an unsupervised clustering algorithm that clusters high dimensional points based on their distribution density. Adaptive DBSCAN (ADBSCAN) has clustering parameters that are adaptive based on range and are especially suitable for processing LIDAR data. It improves the object detection range by 20-30% on average.

Point Cloud Library (PCL)¶

Tutorial: Point Cloud Library (PCL) Optimized for the Intel® oneAPI Base Toolkit

The Point Cloud Library (PCL), a standalone, large scale, open project for 2D/3D image and point cloud processing (see also https://pointclouds.org/). The Robotics SDK SDK version of PCL adds optimized implementations of several PCL modules which allow you to offload computation to a GPU.

ROS 2 Depth Image to Laser Scan¶

ROS 2 Depth Image to Laser Scan, which converts a depth image to a laser scan for use with navigation and localization.

IMU Tools¶

IMU Tools - filters and visualizers - from https://github.com/CCNYRoboticsLab/imu_tools:

imu_filter_madgwick: A filter which fuses angular velocities, accelerations, and (optionally) magnetic readings from a generic IMU device into an orientation.

imu_complementary_filter: A filter which fuses angular velocities, accelerations and (optionally) magnetic readings from a generic IMU device into an orientation quaternion using a novel approach based on a complementary fusion.

rviz_imu_plugin: A plugin for rviz which displays sensor_msgs::Imu messages.

FastMapping¶

Tutorial: FastMapping Algorithm

FastMapping, which is an algorithm to create a 3D voxel map of a robot’s surrounding, based on Intel® RealSense™ depth sensor data.

Collaborative Visual SLAM¶

Tutorial: Collaborative Visual SLAM

Collaborative visual SLAM, a collaborative visual simultaneous localization and mapping (SLAM) framework for service robots. With an edge server maintaining a map database and performing global optimization, each robot can register to an existing map, update the map, or build new maps, all with a unified interface and low computation and memory cost. A collaborative visual SLAM system consists of at least two elements:

The tracker is a visual SLAM system with support for inertial and odometry input. It estimates the camera pose in real-time, and maintains a local map. It can work without a server, but if it has one configured, it communicates with the server to query and update the map. The tracker represents a robot. There can be multiple trackers running at the same time.

The server maintains the maps and communicates with all trackers. For each new keyframe from a tracker, it detects possible loops, both intra-map and inter-map. Once detected, the server performs map optimization or map merging and distributes the updated map to corresponding trackers.

For collaborative visual SLAM details, refer to A Collaborative Visual SLAM Framework for Service Robots paper.

ROS 2 Cartographer¶

ROS 2 Cartographer, a system that provides real-time simultaneous localization and mapping (SLAM) based on real-time 2D LIDAR sensor data. It is used to generate as-built floor plans in the form of occupancy grids.

RTAB-Map¶

Tutorials: Wandering Application in Simulations

RTAB-Map (Real-Time Appearance-Based Mapping), a RGB-D, Stereo and Lidar Graph-Based SLAM approach based on an incremental appearance-based loop closure detector. The loop closure detector uses a bag-of-words approach to determinate how likely a new image comes from a previous location or a new location. When a loop closure hypothesis is accepted, a new constraint is added to the map’s graph, then a graph optimizer minimizes the errors in the map. A memory management approach is used to limit the number of locations used for loop closure detection and graph optimization, so that real-time constraints on large-scale environments are always respected. RTAB-Map can be used alone with a handheld Kinect, a stereo camera or a 3D lidar for 6DoF mapping, or on a robot equipped with a laser rangefinder for 3DoF mapping.

SLAM Toolbox¶

The SLAM toolbox is a set of tools and capabilities for 2D SLAM built by Steve Macenski that includes the following:

Starting, mapping, saving pgm files, and saving maps for 2D SLAM mobile robotics

Refining, remapping, or continue mapping a saved (serialized) pose-graph at any time

Loading a saved pose-graph continue mapping in a space while also removing extraneous information from newly added scans (life-long mapping)

An optimization-based localization mode built on the pose-graph. Optionally run localization mode without a prior map for “LIDAR odometry” mode with local loop closures

Synchronous and asynchronous modes of mapping

Kinematic map merging (with an elastic graph manipulation merging technique in the works)

Plugin-based optimization solvers with an optimized Google* Ceres-based plugin

rviz2 plugin for interacting with the tools

Graph manipulation tools in rviz2 to manipulate nodes and connections during mapping

Map serialization and lossless data storage

ITS Global Path Planner¶

Tutorial: ITS Path Planner ROS 2 Navigation Plugin

Intelligent Sampling and Two-Way Search (ITS) global path planner Robot Operating System 2 (ROS 2) Plugin is a plugin for ROS 2 Navigation package which conducts a path planning search on a roadmap from two directions simultaneously. The main inputs are 2D occupancy grid map, robot position, and the goal position. The occupancy is converted into a roadmap and can be saved for future inquiries. The output is a list of waypoints which constructs the global path. All inputs and outputs are in standard ROS 2 formats. This plugin is a global path planner module which is based on the Intelligent Sampling and Two-Way Search (ITS). Currently, the ITS plugin does not support continuous replanning. To use this plugin, a simple behavior tree with compute path to pose and follow path should be used. The inputs for the ITS planner are global 2d_costmap (nav2_costmap_2d::Costmap2D), start and goal pose (geometry_msgs::msg::PoseStamped). The outputs are 2D waypoints of the path. The ITS planner gets the 2d_costmap and it converts it to either Probabilistic Road Map (PRM) or Deterministic Road Map (DRM). The generated roadmap is saved in a txt file which can be reused for multiple inquiries. Once a roadmap is generated, the ITS conducts a two-way search to find a path from the source to destination. Either the smoothing filter or catmull spline interpolation can be used to create a smooth and continuous path. The generated smooth path is in the form of ROS navigation message type (nav_msgs::msg).

Kudan Visual SLAM¶

Tutorial: Kudan Visual SLAM

Kudan Visual SLAM (KdVisual), Kudan’s proprietary visual SLAM software, has been extensively developed and tested for use in commercial settings. Open source and other commercial algorithms struggle in many common use cases and scenarios. Kudan Visual SLAM achieves much faster processing time, higher accuracy, and a more robust results in dynamic situations.

Robot Localization¶

robot_localization (from https://github.com/cra-ros-pkg/robot_localization),

a collection of state estimation nodes, each of which is an implementation

of a nonlinear state estimator for robots moving in 3D space. It contains two

state estimation nodes, ekf_localization_node and ukf_localization_node. In

addition, robot_localization provides navsat_transform_node, which aids in

the integration of GPS data.

Robotics SDK Middleware¶

Intel® Distribution of OpenVINO™ toolkit, which is a comprehensive toolkit for quickly developing applications and solutions that solve a variety of tasks including emulation of human vision, automatic speech recognition, natural language processing, recommendation systems, and many others. Based on latest generations of artificial neural networks, including Convolutional Neural Networks (CNNs), recurrent and attention-based networks, the toolkit extends computer vision and non-vision workloads across Intel® hardware, maximizing performance. It accelerates applications with high-performance, AI and deep learning inference deployed from edge to cloud.

Intel® oneAPI Base Toolkit, which is able to execute Intel® oneAPI Base Toolkit sample applications. The Intel® oneAPI Base Toolkit is a core set of tools and libraries for developing high-performance, data-centric applications across diverse architectures. It features an industry-leading C++ compiler and the Data Parallel C++ (DPC++) language, an evolution of C++ for heterogeneous computing. For Intel® oneAPI Base Toolkit training, refer to:

Intel® RealSense™ ROS 2 Wrapper node, used for Intel® RealSense™ cameras with ROS 2

Intel® RealSense™ SDK, used to implement software for Intel® RealSense™ cameras

ROS 2 OpenVINO™ Toolkit, which provides a ROS-adapted runtime framework of neural network which quickly deploys applications and solutions for vision inference

AAEON* ROS 2 interface, the ROS 2 driver node for AAEON Robotics SDKs

Slamtec* RPLIDAR ROS 2 Wrapper node, for using RPLIDAR LIDAR sensors with ROS 2

SICK Safetyscanners ROS 2 Driver, which reads the raw data from the SICK Safety Scanners and publishes the data as a laser_scan msg

ROS 2 ros1_bridge, which provides a network bridge allowing the exchange of messages between ROS1 and ROS 2. This lets users evaluate the Robotics SDK SDK on Robotics SDKs or with sensors for which only ROS1 driver nodes exist.

ROS 2, Robot Operating System (ROS), which is a set of open source software libraries and tools for building robot applications

ROS 2 depends on other middleware, like the Object Management Group (OMG) DDS connectivity framework that is using a publish-subscribe pattern (The standard ROS 2 distribution includes eProsima Fast DDS implementation.)

OpenCV (Open Source Computer Vision Library), an open-source library that includes several hundred computer vision algorithms

GStreamer*, which includes support for libv4l2 video sources, GStreamer* “good” plugins for video and audio, and a GStreamer* plugin for display to display a video stream in a window

Teleop Twist Joy, a generic facility for teleoperating twist-based ROS 2 robots with a standard joystick. It converts joy messages to velocity commands. This node provides no rate limiting or auto-repeat functionality. It is expected that you take advantage of the features built into ROS 2 Driver for Generic Joysticks for this.

Teleop Twist Keyboard generic keyboard teleoperation for ROS 2

Twist Multiplexer for when there is more than one source to move a robot with a geometry_msgs::Twist message. It is important to multiplex all input sources into a single source that goes to the Robotics SDK control node.

ROS 2 Driver for Generic Joysticks