Kudan Visual SLAM

Introduction

This chapter describes how the Kudan Visual SLAM (KdVisual) system can be applied for simultaneous localization and mapping. The Intel® Robotics SDK provides several tutorials, which:

- run the Kudan Visual SLAM system using ROS 2 bags that provide data of a robot exploring an area in a lab environment,

- run the Kudan Visual SLAM system using the live video stream of an Intel® RealSense™ camera that is mounted on an AAEON UP Xtreme* i11 Robotic Development Kit.

All tutorials apply the ROS 2 tool rviz2 to visualize how the Kudan Visual SLAM system interprets the input data, how it estimates the path of the moving robot, and how it creates the map.

To get more information about Kudan Visual SLAM you can read Kudan’s article “Kudan Visual SLAM (KdVisual) in action: Forklift in a dynamic warehouse”. Find more information on Kudan in general at www.kudan.io.

Install the Kudan Visual SLAM Package and Tutorials

To become familiar with Kudan Visual SLAM, we recommend that you start with one of the tutorials. The tutorials might install additional Deb packages with ROS 2 bags that consume a certain amount of disk space.

If you do not need the tutorials, you can install the core package of Kudan Visual SLAM. In this case, no ROS 2 bags will be downloaded to your system.

The Intel® Robotics SDK, its Deb packages for Kudan Visual SLAM, and the related tutorials have been developed and verified on the platforms described in the Requirements section of the Getting Started Guide. To install Ubuntu* 22.04 LTS (Jammy Jellyfish) and ROS 2 Humble, follow the instructions in the Prepare the Target System section of the Getting Started Guide.

Install the Kudan Visual SLAM Core Package

To install the core package of Kudan Visual SLAM, run

Open and read the End User License Agreement for Kudan SLAM. The following command uses the Evince document viewer, but you can use any other application that can display PDF documents.

If you agree with the terms of the license agreement, continue with the next steps.

Check that the expiration date of Kudan’s evaluation license satisfies your needs. Contact Kudan if you need a different license.

The Kudan Visual SLAM installation includes additional documentation, which has been provided by Kudan. To display this documentation, open the file /opt/ros/humble/share/kdvisual_ros2/docs/html/index.html with your browser.

The core package of Kudan Visual SLAM depends on several other Deb packages, which are automatically added to your system when you run the apt-get install command mentioned above. The dependencies are:

| Deb package | Explanation |
|---|---|
| | GPU ORB Extractor package |
| | Kudan Visual SLAM helper package |
| | Kudan Visual SLAM message definitions |
| | Kudan Visual SLAM message definitions |
| (further ros-humble packages) | |

Install the tutorial with pre-recorded ROS 2 bags

To install the tutorial with pre-recorded ROS 2 bags, run

This tutorial depends on several other Deb packages, which are automatically added to your system when you run the apt-get install command mentioned above. The dependencies are:

| Deb package | Explanation |
|---|---|
| | Kudan Visual SLAM core package |
| ros-humble-bagfile-moving-15fps | ROS 2 bag package |
| | rviz2 package |

Install the tutorial with Kudan Visual SLAM, AAEON robotic kit, and Intel® RealSense™ camera

Instead of using a pre-recorded ROS 2 bag, this tutorial applies an AAEON UP Xtreme* i11 Robotic Development Kit that is equipped with an Intel® RealSense™ camera. The stream from the Intel® RealSense™ camera serves as the input for the Kudan Visual SLAM system. To install this tutorial, run

This tutorial depends on several other Deb packages, which are automatically added to your system when you run the apt-get install command mentioned above. The dependencies are:

| Deb package | Explanation |
|---|---|
| | Kudan Visual SLAM core package |
| | teleoperation node to move your robot by keyboard commands |
| ros-humble-aaeon-ros2-amr-interface | interface for the motor control board of AAEON robots |
| ros-humble-realsense2-camera | interface for Intel® RealSense™ cameras |
| | rviz2 package |

Execute the Tutorials

Tutorial with pre-recorded ROS 2 bags from an RGBD camera

This section describes how to run the Kudan Visual SLAM system using a pre-recorded video stream from an Intel® RealSense™ camera. The tutorial uses the ROS 2 bag robot_moving_15fps. This ROS 2 bag is part of the Deb package ros-humble-bagfile-moving-15fps. After you have installed this Deb package, you can find the ROS 2 bag at /opt/ros/humble/share/bagfiles/moving-15fps.

Install the tutorial as described in the Install the tutorial with pre-recorded ROS 2 bags section. Read the End User License Agreement for Kudan SLAM as described in the Install the Kudan Visual SLAM Core Package section. If you agree with the terms of this document, continue with the next steps.

Set up the ROS 2 environment:
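A minimal sketch of this step, assuming ROS 2 Humble was installed from Deb packages under /opt/ros/humble (the prefix used by the paths in this chapter). The function names are illustrative only, and the `ros2 bag info` call is an optional check that the bag is readable rather than a part of the tutorial.

```shell
# Assumption: default install prefix of the ROS 2 Humble Deb packages.
ROS_SETUP=/opt/ros/humble/setup.bash
# Bag location as stated in the text above.
BAG_DIR=/opt/ros/humble/share/bagfiles/moving-15fps

setup_ros2() {
    # Makes ros2, rviz2, and the installed packages available in this shell.
    source "$ROS_SETUP"
}

check_bag() {
    # Prints duration, message count, and recorded topics of the bag.
    ros2 bag info "$BAG_DIR"
}
```

Call `setup_ros2` once per bash terminal, then `check_bag` if you want to inspect the bag before running the tutorial.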

Run the Kudan Visual SLAM algorithm using a ROS 2 bag simulating a robot exploring an area:

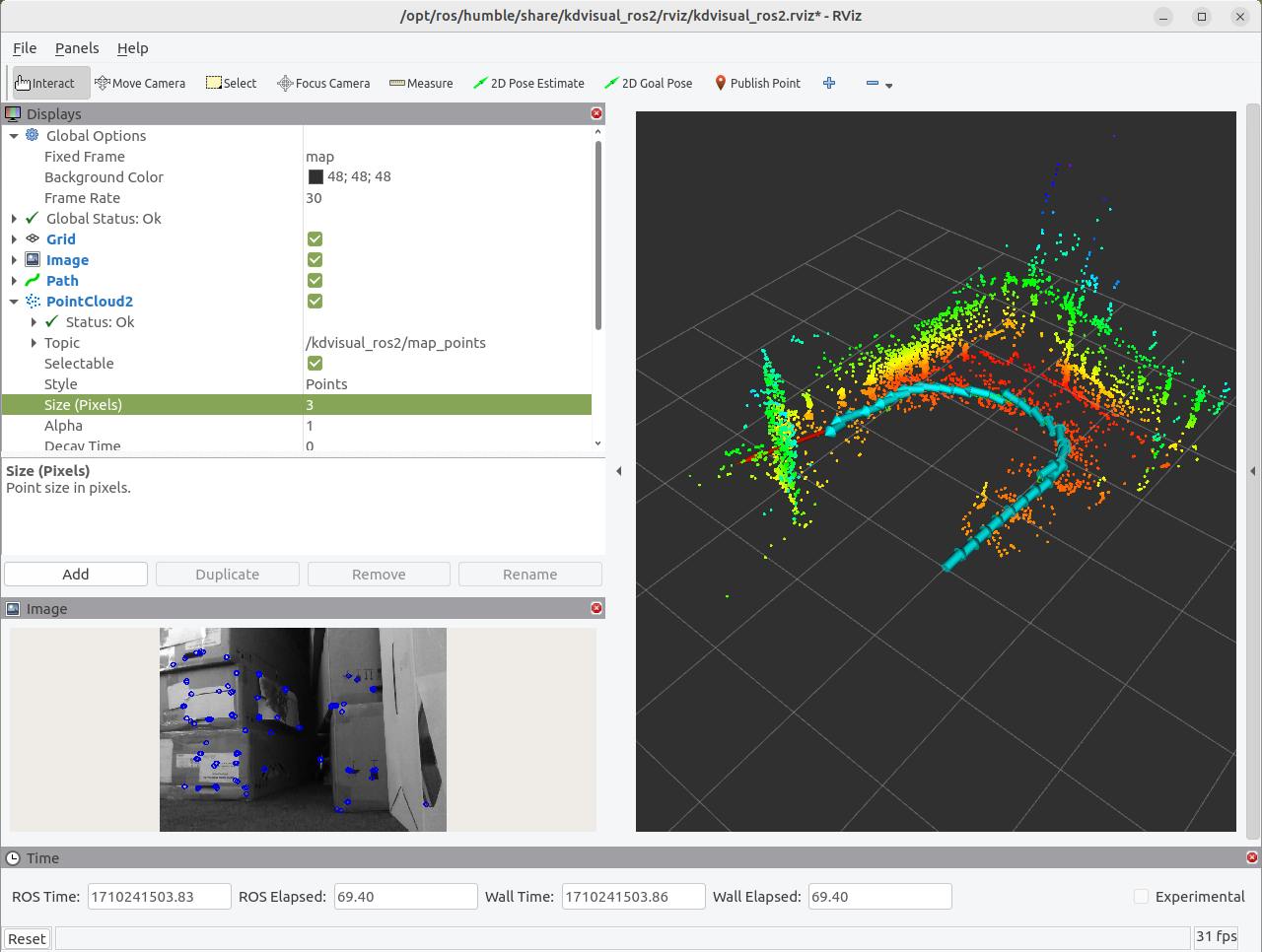

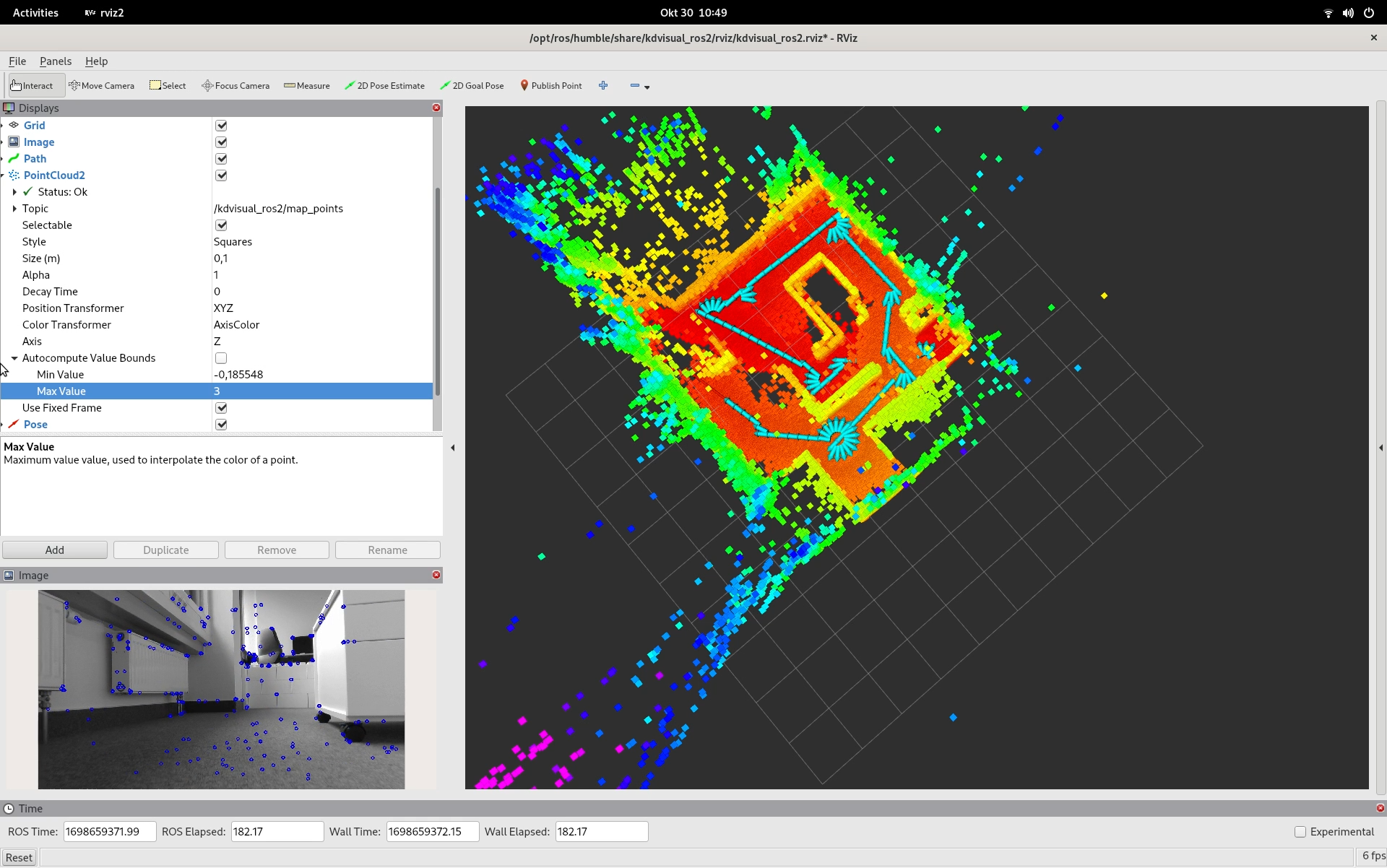

In the rviz2 window, you can see how the Kudan Visual SLAM system estimates the path of the robot and creates the point cloud representing the walls and obstacles within the environment. The rviz2 tool shows:

- the current image from the camera,

- the features identified by the ORB Extractor (shown as blue dots in the camera window),

- the identified walls and obstacles (shown in yellow and green, depending on their height),

- the key frames (shown as cyan arrows),

- the current estimated pose of the robot (shown as a red arrow, hard to see in the figure below),

- the estimated path of the robot (shown in green, hidden by the key frames in the figure below).

To close the tutorial, minimize the rviz2 window, and press Ctrl-c in the terminal where you started the script. Wait a few seconds until all related processes have shut down.

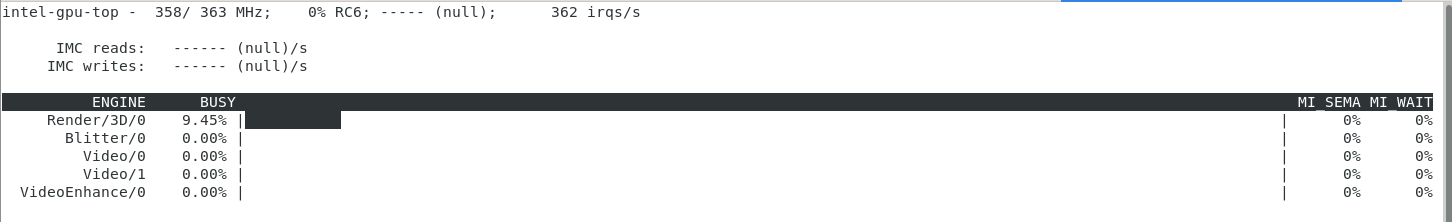

The Kudan Visual SLAM system can also offload some processing to the GPU. To achieve this, the Kudan Visual SLAM system applies the GPU ORB Extractor package that is part of the Intel® Robotics SDK.

To execute the Kudan Visual SLAM system with GPU acceleration in the same environment as used before, you can run:

Note

Offloading to the GPU only works on systems with 11th, 12th, or 13th Generation Intel® Core™ i3/i5/i7 processors with Intel® Iris® Xe Integrated Graphics or Intel® UHD Graphics.

In a different terminal, check how much of the GPU is used.

To analyze the GPU load, you can use the tool intel_gpu_top, which is part of the package intel-gpu-tools:
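A minimal sketch, assuming the package is installed with apt-get and that the tool needs root privileges to read the GPU counters, which is typically the case. The function names are illustrative only.

```shell
# Install the tool once (needs root privileges).
install_gpu_tools() {
    sudo apt-get install -y intel-gpu-tools
}

# Show the live load of the GPU engines; stop it with Ctrl-c.
show_gpu_load() {
    sudo intel_gpu_top
}
```

Run `show_gpu_load` in the second terminal while the tutorial is running with GPU acceleration.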

To close the tutorial, minimize the rviz2 window, and press Ctrl-c in the terminal where you started the script. Wait a few seconds until all related processes have shut down.

Tutorial with pre-recorded ROS 2 bags from a stereo camera

The Kudan Visual SLAM system can also work on video streams from a stereo camera. To test the Kudan Visual SLAM system in this mode, you need a video stream with stereo data. Bags with stereo data can be found in the EuRoC MAV Dataset. In the following, we use the bag file MH_01_easy.bag.

Create a folder EuRoC_MAV_Dataset in your home directory and change to this folder.

Download the bag file MH_01_easy.bag from the “Downloads” section of the EuRoC MAV Dataset.

Save the file MH_01_easy.bag into the EuRoC_MAV_Dataset folder that you have created. Since this file is a ROS 1 bag, convert it into a ROS 2 bag by means of these commands:
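One possible way to do the conversion, sketched under the assumption that the third-party Python package rosbags is used; it is not part of the Intel® Robotics SDK, and its command-line syntax differs between releases. The output folder name MH_01_easy and the function names are illustrative.

```shell
# Assumption: conversion uses the third-party "rosbags" Python package.
convert_euroc_bag() {
    pip3 install rosbags
    # Recent rosbags releases use --src/--dst; older releases take the input
    # file as a positional argument instead (rosbags-convert MH_01_easy.bag).
    rosbags-convert --src MH_01_easy.bag --dst MH_01_easy
}

# A ROS 2 bag is a folder that contains metadata.yaml next to the storage file(s).
is_ros2_bag_dir() {
    [ -d "$1" ] && [ -f "$1/metadata.yaml" ]
}
```

After running `convert_euroc_bag` inside the EuRoC_MAV_Dataset folder, `is_ros2_bag_dir MH_01_easy` gives a quick check that the conversion produced a ROS 2 bag folder.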

If everything went well, your directory tree looks like this:

If not already done, set up the ROS 2 environment:

To run the Kudan Visual SLAM system with a user-provided ROS 2 bag, the Intel® Robotics SDK provides the script kudan-slam-generic.sh with the following syntax:

- The 1st parameter (cpu or gpu) specifies whether the ORB feature extraction is performed by the CPU or by the GPU ORB Extractor.

- The 2nd parameter specifies whether the input stream comes from an RGBD camera (rgbd) or a stereo camera (stereo). The third option (euroc) is similar to stereo. However, since the ROS 2 bags of the EuRoC data set do not provide camera calibration data, the euroc option tells the Kudan Visual SLAM system to use its built-in calibration data for the EuRoC data set.

- The 3rd parameter specifies the folder where the ROS 2 bag is stored.
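The parameter rules above can be sketched as a small checker that prints the resulting command line. `kudan_generic_cmd` is a hypothetical helper, not part of the SDK; it assumes the script is reachable as kudan-slam-generic.sh (prefix its install path if it is not on your PATH) and that the converted EuRoC bag lives in a folder named MH_01_easy.

```shell
# Hypothetical helper: validate the three parameters and print the command line.
kudan_generic_cmd() {
    case "$1" in
        cpu|gpu) ;;
        *) echo "1st parameter must be cpu or gpu" >&2; return 1 ;;
    esac
    case "$2" in
        rgbd|stereo|euroc) ;;
        *) echo "2nd parameter must be rgbd, stereo, or euroc" >&2; return 1 ;;
    esac
    if [ -z "$3" ]; then
        echo "3rd parameter must be the ROS 2 bag folder" >&2
        return 1
    fi
    echo "kudan-slam-generic.sh $1 $2 $3"
}

# Stereo bag from the EuRoC data set, feature extraction on the CPU.
kudan_generic_cmd cpu euroc "$HOME/EuRoC_MAV_Dataset/MH_01_easy"
# RGBD bag shipped with the tutorial, feature extraction on the GPU.
kudan_generic_cmd gpu rgbd /opt/ros/humble/share/bagfiles/moving-15fps
```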

Run the Kudan Visual SLAM system using the ROS 2 bag with the stereo stream from the EuRoC data set:

You can also run the same tutorial with GPU acceleration:

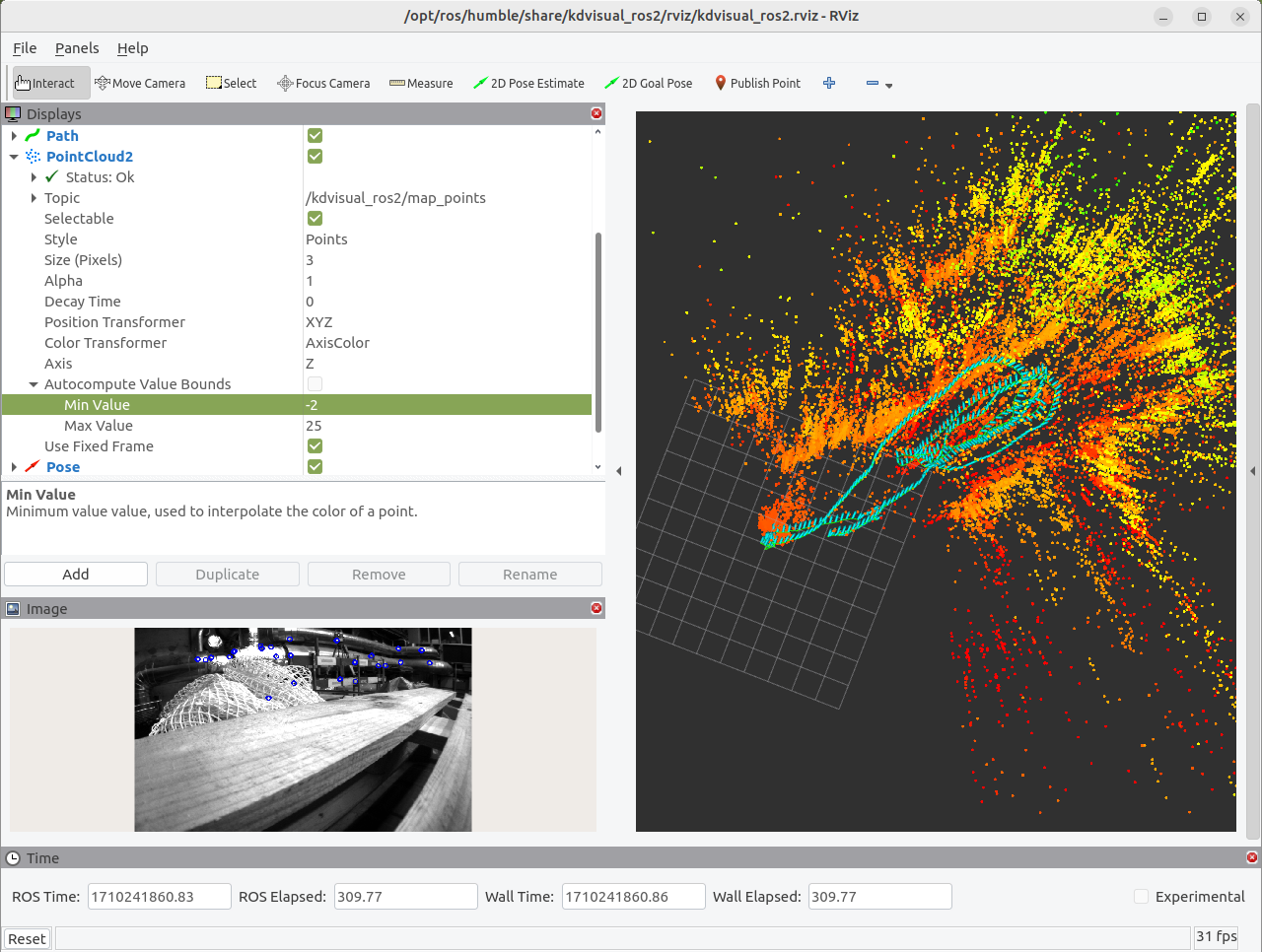

In the rviz2 window, you can see how the Kudan Visual SLAM system estimates the path of the robot and creates the point cloud representing the walls and obstacles within the environment.

To close the tutorial, minimize the rviz2 window, and press Ctrl-c in the terminal where you started the script. Wait a few seconds until all related processes have shut down.

Tutorial with direct input of pre-recorded ROS 2 bags

The shell scripts of the tutorials described so far use the ros2 bag play command to play back the ROS 2 bags. This means that the ROS 2 DDS middleware is used to transmit the input data into the Kudan Visual SLAM system. As an alternative, Kudan offers the possibility to feed the ROS 2 bag data directly into the Kudan Visual SLAM system. This feature is used by the shell script kudan-slam-direct-input.sh. This shell script has the same syntax as the script kudan-slam-generic.sh, which was described in the section above.

If not already done, set up the ROS 2 environment:

To execute the Kudan Visual SLAM system using the ROS 2 bag robot_moving_15fps with direct input, run:

Since this script does not use the ros2 bag play command and the ROS 2 DDS middleware, it does not include any clock mechanism for streaming the video frames from the ROS 2 bag into the SLAM system. As a consequence, the script executes faster than real time, because the processing speed is limited only by the performance of the CPU and, if the GPU ORB Extractor is used, of the GPU.

To execute this tutorial with GPU acceleration, run:

Tutorial with Kudan Visual SLAM, AAEON robotic kit, and Intel® RealSense™ camera

This tutorial describes how the Kudan Visual SLAM system can be integrated into an AAEON UP Xtreme* i11 Robotic Development Kit. This kit comes with an Intel® RealSense™ depth camera D435i, which provides the input stream for the Kudan Visual SLAM system. The Intel® Robotics SDK provides the Deb package ros-humble-realsense2-camera, which implements the realsense camera node and publishes the data from the camera as ROS 2 topics.

The AAEON UP Xtreme* i11 Robotic Development Kit also includes a motor control board, which implements the motor drivers and the interface towards the compute board. To support this motor control board, the Intel® Robotics SDK provides the Deb package ros-humble-aaeon-ros2-amr-interface, which is based on the GitHub project AAEONAEU-SW/ros2_amr_interface with some adaptations for ROS 2 Humble. We use this package to launch the ROS 2 node AMR_node, which receives all movement-related ROS 2 topics and controls the motor drivers accordingly.

In this tutorial, we will use the keyboard to control the movements of the robot manually. This can be done by the ROS 2 command teleop_twist_keyboard, which generates another ROS 2 node that publishes the movement-related topics to the AMR_node.

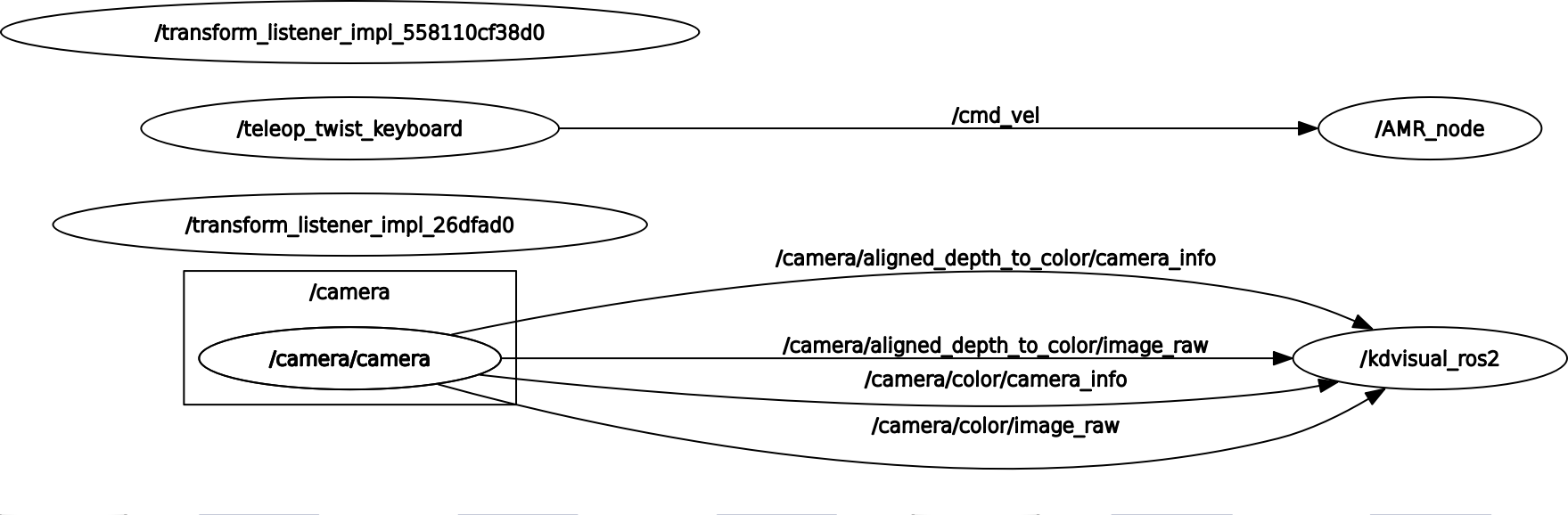

The following figure shows the nodes that are involved in this tutorial:

- The teleop_twist_keyboard node picks up the user’s commands via the keyboard and generates the movement-related topics that are sent to the AMR_node.

- The AMR_node represents the motor controller of the robot.

- The camera node provides the data from the Intel® RealSense™ camera as ROS 2 topics.

- The kdvisual_ros2 node represents the Kudan Visual SLAM system.

Install the required Deb packages as described in the Install the tutorial with Kudan Visual SLAM, AAEON robotic kit, and Intel® RealSense™ camera section.

Set up the ROS 2 environment and run the tutorial script, which launches Kudan Visual SLAM together with the Intel® RealSense™ camera node and the AMR_node. The script also launches the rviz2 tool, which will show the camera image and the map together with the key frames and the robot’s path identified by the Kudan Visual SLAM system.

In a second shell, set up the ROS 2 environment and run the teleop_twist_keyboard command:
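A minimal sketch of this step, assuming ROS 2 Humble is installed under /opt/ros/humble and that the teleoperation node comes from the standard teleop_twist_keyboard ROS 2 package. The function name is illustrative only.

```shell
run_teleop() {
    # Set up the ROS 2 environment in this (bash) shell.
    source /opt/ros/humble/setup.bash
    # Start the keyboard teleoperation node; it publishes velocity commands
    # for as long as this terminal has the keyboard focus.
    ros2 run teleop_twist_keyboard teleop_twist_keyboard
}
```

Keep this terminal in the foreground while driving, because the node reads the key presses directly from it.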

We strongly recommend decreasing the robot’s speed. This can be done by pressing the z key several times in the teleop_twist_keyboard command window. Then you can control the robot using these keys:

| u | i | o |
|---|---|---|
| j | k | l |
| m | , | . |

The following image shows the map that was identified by the Kudan Visual SLAM system while we executed this tutorial in an environment with several walls and obstacles. The walls and obstacles are represented in green and yellow. The cyan arrows represent the key frames that were identified while we moved the robot using the teleop_twist_keyboard application.

The following video shows the camera stream and the localization and mapping process of the Kudan Visual SLAM system while we moved the robot through the environment.

Troubleshooting

Running Kudan Visual SLAM in a containerized environment

If you plan to use the Intel® Robotics SDK in a containerized environment, the container with Kudan Visual SLAM must be run with an extended group membership. To allow the GPU ORB Extractor to access the GPU, the container must belong to the render group. Otherwise, you might see an error message similar to /workspace/src/gpu/l0_rt_helpers.h:56: L0 error 78000001.

To give the container the appropriate group membership, the container can be started in the following way:
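A minimal sketch, assuming Docker is the container runtime. It passes the GPU device nodes and the numeric ID of the host's render group to the container; the image name is a placeholder that you have to supply, and the function names are illustrative only.

```shell
# Extract the numeric GID (third field) from a group database entry
# such as "render:x:109:alice".
gid_field() {
    cut -d: -f3
}

# Start a container that can access the GPU. $1 is a placeholder for your image.
run_kudan_container() {
    render_gid=$(getent group render | gid_field)
    docker run -it --rm \
        --device /dev/dri \
        --group-add "$render_gid" \
        "$1"
}
```

The numeric ID is used instead of the group name because the render group may have a different ID, or not exist at all, inside the container image.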

L0 error 78000001 in a native environment

In a native environment, you might also see an error message similar to ./src/gpu/l0_rt_helpers.h:56: L0 error 78000001. This can happen right after you have installed the Deb package liborb-lze, or any of the ros-humble-kdvisual-* Deb packages, which install liborb-lze as their dependency. This error can be fixed by rebooting the system.

The error can also happen if you access the system via an SSH connection and the current user does not belong to the render group. Use the groups command to display the group membership of the current user. If the user does not belong to this group, add the group membership by means of:
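A minimal sketch of the check and the fix, using the standard `id` and `usermod` tools. The function names are illustrative only.

```shell
# Succeeds if the current user is a member of group $1.
current_user_in_group() {
    id -nG | tr ' ' '\n' | grep -qx "$1"
}

# Add the current user to the render group (needs root privileges).
add_user_to_render_group() {
    sudo usermod -a -G render "$USER"
}

if current_user_in_group render; then
    echo "current user already belongs to the render group"
else
    echo "current user is not in the render group; run add_user_to_render_group"
fi
```

The new membership only applies to sessions started afterwards, so log out and back in (or reconnect the SSH session) before retrying.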

Issues with the Kudan license manager

The error message “Failed to load license: Missing or invalid license file!” indicates that the Kudan license manager has detected an issue with the status of certain system files. To fix the issue, ensure that your file system does not include any files that have a modification date in the future. You can use the Linux* command find to identify such files in your file system. In general, you should refrain from manually changing the date of your system clock.
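One way to search for such files is to compare against a reference file that was created just now. This is a sketch rather than the procedure prescribed by Kudan; `find_future_files` is an illustrative name, and files that are being written while the search runs can show up as well.

```shell
# List regular files below $1 (default: /) whose modification time is later
# than the moment this function was called.
find_future_files() {
    ref=$(mktemp) || return 1      # reference file stamped with the current time
    find "${1:-/}" -xdev -type f -newer "$ref" 2>/dev/null
    rm -f "$ref"
}
```

Calling `find_future_files /opt`, for example, limits the search to one directory tree. Scanning `/` usually needs sudo to reach all directories, and `-xdev` keeps the search on one file system.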

Issues with accessing the AAEON robotic kit

In case the tutorial with Kudan Visual SLAM, AAEON robotic kit, and Intel® RealSense™ camera encounters issues accessing the AAEON motor control board, refer to the Troubleshooting AAEON Motor Control Board Issues section.

Tracking is occasionally lost

On some processing platforms, the Kudan Visual SLAM system occasionally reports the warning “publish old TF data, trackingState is Lost”. Despite this warning, Kudan Visual SLAM can recover from this situation, so that the tracking is continued. This issue is related to the ROS 2 DDS middleware. It does not occur if the Kudan Visual SLAM node can directly read from the ROS 2 bag using the direct input mechanism.

The issue primarily occurs on processors that are based on the Intel® Performance Hybrid Architecture, which has been introduced in 12th Gen Intel® Core™ processors. For these processors and their successors, the issue can be mitigated by limiting the CPU affinity of the Kudan Visual SLAM processes to the P-cores. In consequence, the Kudan Visual SLAM processes won’t be executed by the E-cores.

To give an example, the Intel® Core™ i7-1270PE processor is based on the Performance Hybrid Architecture with four P-cores and eight E-cores. If Hyper-Threading is enabled, this processor has eight logical CPUs that correspond to the four physical P-cores. These logical CPUs are numbered from 0 to 7.

To identify the logical CPUs of your system, you can use the command:
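One way to list them, assuming the `lscpu` tool from util-linux is available, as it is on standard Ubuntu installations. The function name is illustrative only.

```shell
# Print one row per logical CPU, including the physical core it belongs to.
show_logical_cpus() {
    lscpu --all --extended
}
```

In the output, rows that share the same CORE value are Hyper-Threading siblings. Since E-cores run only one thread per core, logical CPUs that appear in pairs belong to P-cores when Hyper-Threading is enabled.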

To set the CPU affinity of the Kudan Visual SLAM processes, edit the launch file with sudo rights:

Search for the lines that launch the Kudan Visual SLAM node with the GPU ORB Extractor and replace them with:

The enumeration after taskset -c specifies the logical CPUs that shall be used for running the processes of the Kudan Visual SLAM node. If your processor has a different number of logical CPUs corresponding to the P-cores, you have to adapt this enumeration.

In the same way, search for the lines that launch the Kudan Visual SLAM node with the CPU-based ORB feature extraction and replace them with:

Again, the enumeration after taskset -c has to be adapted to the number of logical CPUs corresponding to the P-cores.
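The CPU list itself can be derived with a little arithmetic. The sketch below is a generic shell illustration and does not reproduce the launch file change; it assumes that the logical CPUs of the P-cores are numbered first, as in the i7-1270PE example above, and both function names are hypothetical.

```shell
# CPU list for taskset: P-cores times threads per core, numbered from 0.
# $1 = number of P-cores, $2 = threads per core (default 2, Hyper-Threading on).
pcore_cpu_list() {
    echo "0-$(( $1 * ${2:-2} - 1 ))"
}

# Run a command, and the processes it starts, only on the given logical CPUs.
run_on_cpus() {
    cpus=$1
    shift
    taskset -c "$cpus" "$@"
}

pcore_cpu_list 4 2    # four P-cores with Hyper-Threading: prints 0-7
```

For example, `run_on_cpus "$(pcore_cpu_list 4 2)" some_command` pins `some_command` to logical CPUs 0 to 7. You can inspect the affinity of a running process with `taskset -cp <pid>`.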

General issues

For general robot issues, go to: Troubleshooting for Robot Tutorials.